DMflow.chat

ad

All-in-one DMflow.chat: Supports multi-platform integration, persistent memory, and flexible customizable fields. Connect databases and forms without extra development, plus interactive web pages and API data export, all in one step!

Description: A deep dive into the essence of visual prompt injection attacks, real-world case studies, and the latest defense strategies. This article explores this emerging AI security threat and its far-reaching implications for future technology development.

Visual prompt injection attacks exploit vulnerabilities in advanced multimodal AI systems, such as GPT-4V, by embedding hidden instructions within images. These attacks aim to manipulate the system into performing unintended actions or generating misleading outputs.

Key risks include:

Since the release of GPT-4V in September 2023, researchers have uncovered diverse methods for visual prompt injections, ranging from simple CAPTCHA bypassing to sophisticated hidden directive techniques.

A striking demonstration involved embedding specific instructions on a piece of A4 paper to achieve invisibility effects:

Researchers found ways to:

This experiment highlighted the potential for exploitation in commercial contexts:

Efforts to counter visual prompt injection attacks are underway, focusing on:

Organizations and researchers are also exploring broader solutions:

Q1: What are the primary risks of visual prompt injection attacks?

A1: Major risks include bypassing AI safeguards, misleading AI behavior, and potential misuse for malicious purposes like deceiving surveillance systems or manipulating AI decisions.

Q2: How can I identify potential visual prompt injection attacks?

A2: Look for anomalies in images, such as suspicious text, hidden instructions, or unexpected AI system behavior.

Q3: What should companies do to protect against these attacks?

A3: Companies should adopt cutting-edge security tools, keep AI systems updated, conduct regular audits, and implement robust monitoring mechanisms.

Understanding visual prompt injection attacks is crucial to navigating the challenges of AI safety in an evolving technological landscape. By staying vigilant and informed, we can better prepare for emerging threats and ensure the reliable advancement of AI technologies.

For detailed examples and further insights, explore the complete guide here:

The Beginner’s Guide to Visual Prompt Injections: Invisibility Cloaks, Cannibalistic Adverts, and Robot Women

All-in-one DMflow.chat: Supports multi-platform integration, persistent memory, and flexible customizable fields. Connect databases and forms without extra development, plus interactive web pages and API data export, all in one step!

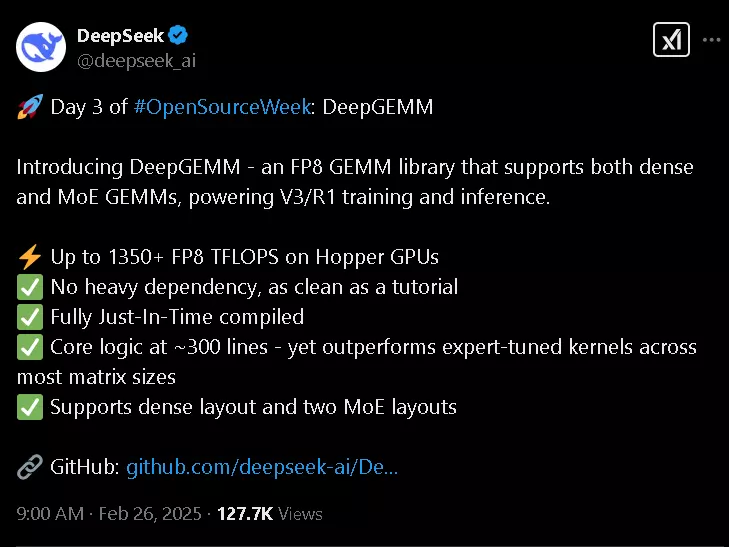

DeepSeek Open Source Week Day 3: Introducing DeepGEMM — A Game-Changer for AI Training and Infere...

Whoa, 3000GB/s? DeepSeek’s New Tool is Changing the Game for Large Language Models So, DeepSe...

DeepSeek’s Open-Source Week: Five Repos, One Mission—Community Innovation The world of artifi...

Charting the Future of AI: OpenAI’s Roadmap from GPT-4.5 (Orion) to GPT-5 If you’ve been foll...

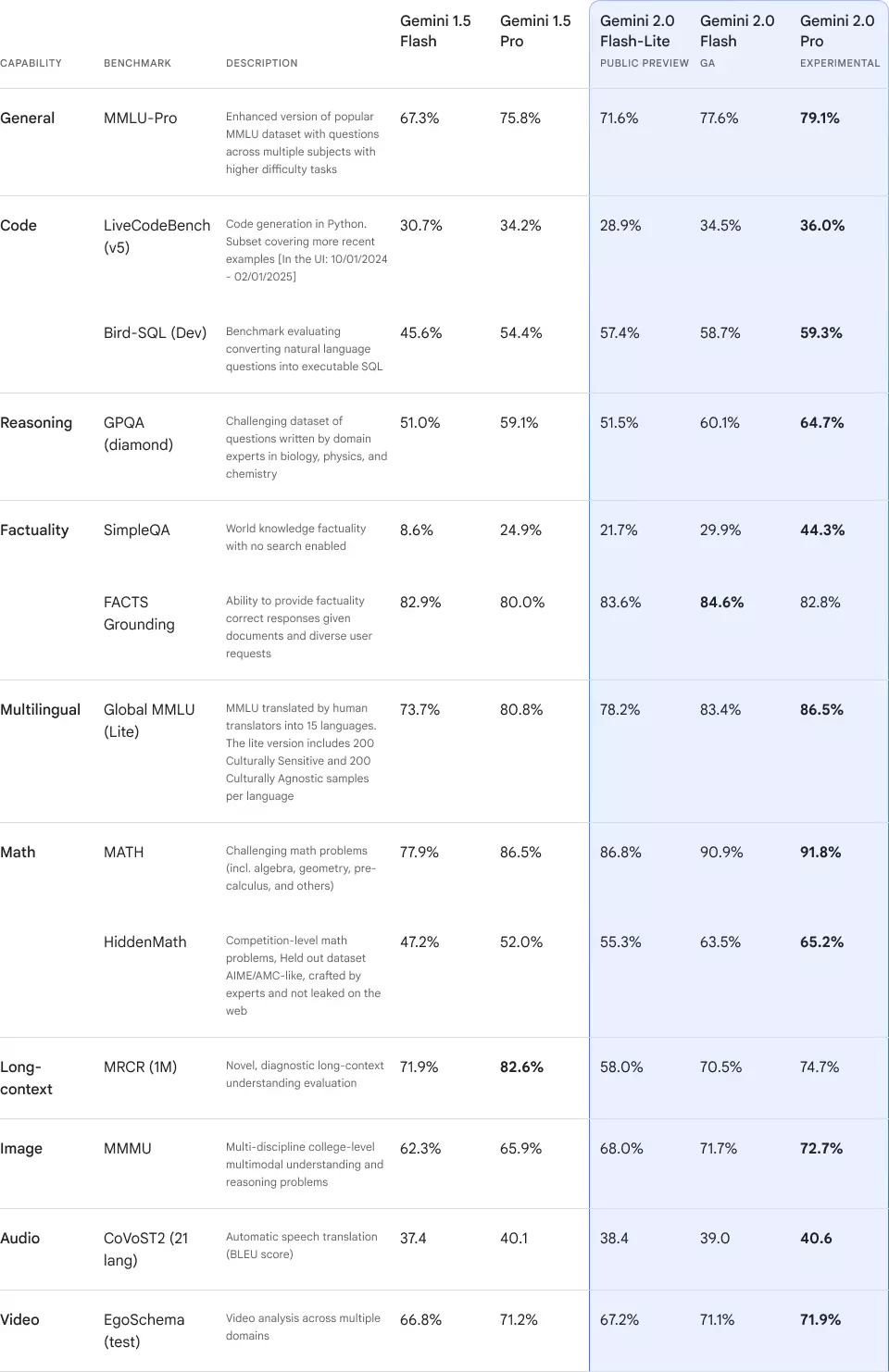

Gemini 2.0 Official Release: AI Models with Enhanced Performance Introduction In 2024, AI model...

Deep Research: A Comprehensive Analysis of ChatGPT’s Revolutionary Research Feature Introduction...

Claude Prompt Caching: Faster, More Efficient AI Conversations Anthropic introduces the new Clau...

Meta Motivo: A Breakthrough AI Full-Body Humanoid Control Model | Full Analysis and Applications ...

OpenAI Offers Limited-Time Free Fine-Tuning Service for GPT-4o Mini Model OpenAI is currently of...

By continuing to use this website, you agree to the use of cookies according to our privacy policy.