DMflow.chat


DMflow.chat: Smart integration for innovative communication! Supports persistent memory, customizable fields, seamless database and form connections, and API data export for more flexible and efficient web interactions!

DeepSeek has just unveiled DeepGEMM on the third day of its “Open Source Week,” and the AI community is already buzzing. DeepGEMM is an open-source library for FP8 General Matrix Multiplication (GEMM), designed for both dense and Mixture-of-Experts (MoE) matrix operations. It directly powers the training and inference of DeepSeek’s flagship models, DeepSeek V3 and R1.
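To make “FP8 GEMM” more concrete, here is a minimal pure-Python sketch of what such a kernel computes, built on simplifying assumptions of our own. Each row is divided by a scaling factor and rounded to the FP8 E4M3 format (4 exponent bits, 3 mantissa bits, largest finite value 448). The products are then accumulated in higher precision, with Python’s double-precision floats standing in for the GPU accumulator, and the result is multiplied back by both scales. The per-row scaling, the helper names (`round_to_e4m3`, `quantize_rows`, `fp8_gemm_nt`) and the choice to store B as N × K rows are hypothetical choices made for illustration. DeepGEMM itself is described as using finer-grained scaling and runs on Hopper tensor cores, not Python loops.

```python
import math

E4M3_MAX = 448.0            # largest finite FP8 E4M3 value
E4M3_MIN_NORMAL = 2.0 ** -6  # smallest normal E4M3 magnitude
E4M3_MANTISSA_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Round to the nearest E4M3 value (ties to even), saturating at +/-448."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    mag = abs(x)
    if mag >= E4M3_MAX:
        return sign * E4M3_MAX
    if mag < E4M3_MIN_NORMAL:
        step = 2.0 ** (-6 - E4M3_MANTISSA_BITS)  # subnormal spacing: 2**-9
    else:
        _, e = math.frexp(mag)  # mag = m * 2**e with 0.5 <= m < 1
        step = 2.0 ** (e - 1 - E4M3_MANTISSA_BITS)  # spacing within this binade
    return sign * min(round(mag / step) * step, E4M3_MAX)


def quantize_rows(mat):
    """Hypothetical per-row scaling: map each row's max |value| onto 448, then round."""
    q, scales = [], []
    for row in mat:
        amax = max(abs(v) for v in row) or 1.0  # avoid dividing by zero on all-zero rows
        s = amax / E4M3_MAX
        q.append([round_to_e4m3(v / s) for v in row])
        scales.append(s)
    return q, scales


def fp8_gemm_nt(a, b):
    """C = A @ B^T with FP8-emulated inputs; a is M x K, b is N x K."""
    a_q, a_s = quantize_rows(a)
    b_q, b_s = quantize_rows(b)
    out = []
    for i, a_row in enumerate(a_q):
        out_row = []
        for j, b_row in enumerate(b_q):
            acc = sum(x * y for x, y in zip(a_row, b_row))  # high-precision accumulation
            out_row.append(acc * a_s[i] * b_s[j])            # undo both scaling factors
        out.append(out_row)
    return out


a = [[1.0, 2.0, 3.0], [0.5, -1.5, 2.5]]
b = [[1.0, 0.0, -1.0], [2.0, 1.0, 0.5]]
exact = [[sum(x * y for x, y in zip(r, c)) for c in b] for r in a]
approx = fp8_gemm_nt(a, b)
print(exact)
print(approx)
```

Comparing `approx` with `exact` shows the small but visible rounding error that FP8 inputs introduce, which is why scaling factors travel alongside the FP8 data.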

But what makes DeepGEMM stand out? Let’s break it down.

According to DeepSeek’s official announcement on X (formerly Twitter), DeepGEMM delivers up to 1350+ TFLOPS of FP8 compute on NVIDIA Hopper GPUs. Even more impressive, its core logic is only around 300 lines of code, a remarkable balance of simplicity and speed.

Here’s a snapshot of what DeepGEMM brings to the table.

Performance is key in AI model training, and DeepGEMM doesn’t disappoint. In DeepSeek’s published benchmarks, it matches or outpaces expert-tuned implementations across a range of matrix sizes, with the largest speedups on the smallest shapes.

Normal GEMMs (dense models):

| M | N | K | Computation (TFLOPS) | Speedup vs. expert-tuned baseline |
|---|---|---|---|---|
| 64 | 2112 | 7168 | 206 | 2.7x |
| 128 | 2112 | 7168 | 352 | 2.4x |
| 4096 | 2112 | 7168 | 1058 | 1.1x |
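The TFLOPS figures can be turned into concrete kernel times with a little arithmetic. A GEMM of shape M × N × K performs 2·M·N·K floating-point operations (one multiply and one add per term), so runtime ≈ FLOPs / throughput. The sketch below applies this to the rows of the first table. Treating the reported speedup as a throughput ratio on the same shape, and therefore deriving an implied baseline TFLOPS, is our assumption; the speedups are also rounded, so those baseline figures are only approximate.

```python
def gemm_flops(m: int, n: int, k: int) -> int:
    """Each of the M*N outputs needs K multiplies and K adds: 2*M*N*K FLOPs."""
    return 2 * m * n * k


def kernel_time_us(m: int, n: int, k: int, tflops: float) -> float:
    """Runtime implied by a reported throughput, in microseconds."""
    return gemm_flops(m, n, k) / (tflops * 1e12) * 1e6


# (M, N, K, reported TFLOPS, reported speedup) copied from the first table
DENSE_ROWS = [
    (64, 2112, 7168, 206, 2.7),
    (128, 2112, 7168, 352, 2.4),
    (4096, 2112, 7168, 1058, 1.1),
]

for m, n, k, tflops, speedup in DENSE_ROWS:
    t = kernel_time_us(m, n, k, tflops)
    baseline_tflops = tflops / speedup  # assumption: same shape, so speedup = throughput ratio
    print(f"M={m:>4}: {gemm_flops(m, n, k) / 1e9:7.2f} GFLOP, "
          f"~{t:6.1f} us, implied baseline ~{baseline_tflops:6.1f} TFLOPS")
```

For M = 64 the whole multiplication takes roughly 9 µs, versus about 117 µs at M = 4096. Small-M shapes do comparatively little arithmetic per weight loaded from memory, which is one plausible (unconfirmed) reason the relative speedup is largest there.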

Grouped GEMMs (MoE models):

| Groups | M per Group | N | K | Computation (TFLOPS) | Speedup vs. expert-tuned baseline |
|---|---|---|---|---|---|
| 1 | 1024 | 4096 | 7168 | 1233 | 1.2x |
| 2 | 512 | 7168 | 2048 | 916 | 1.2x |
| 4 | 256 | 4096 | 7168 | 932 | 1.1x |
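In a Mixture-of-Experts layer, each expert has its own weight matrix, so the tokens routed to different experts need separate matrix multiplications; a grouped GEMM handles all of those groups in one call. The reference below is a plain-Python sketch of that idea, assuming the rows of A are already sorted by expert and stacked contiguously, with one N × K weight matrix per group. The function names are hypothetical and this is not DeepGEMM’s API.

```python
def matmul_nt(a, b):
    """Reference C = A @ B^T for A (M x K) and B (N x K), given as lists of rows."""
    return [[sum(x * y for x, y in zip(a_row, b_row)) for b_row in b] for a_row in a]


def grouped_gemm_contiguous(a, weights, group_sizes):
    """Grouped GEMM: consecutive row blocks of A are multiplied by different weights.

    a:           sum(group_sizes) x K, rows sorted by group (e.g. by MoE expert)
    weights:     one N x K matrix per group
    group_sizes: number of rows of A belonging to each group
    """
    assert len(weights) == len(group_sizes)
    assert sum(group_sizes) == len(a)
    out, start = [], 0
    for w, m_g in zip(weights, group_sizes):
        out.extend(matmul_nt(a[start:start + m_g], w))  # one ordinary GEMM per group
        start += m_g
    return out


def grouped_flops(group_sizes, n, k):
    """Total work of a grouped GEMM: 2 * (sum of group rows) * N * K."""
    return 2 * sum(group_sizes) * n * k


# Two "experts": three tokens routed to expert 0, one token to expert 1.
a = [[1, 0], [0, 1], [1, 1], [2, 3]]
weights = [
    [[1, 2], [3, 4]],  # expert 0: N x K = 2 x 2
    [[1, 0], [0, 1]],  # expert 1: identity
]
print(grouped_gemm_contiguous(a, weights, [3, 1]))  # -> [[1, 3], [2, 4], [3, 7], [2, 3]]

# Same total rows (1024) split differently: identical FLOP counts.
print(grouped_flops([1024], 4096, 7168) == grouped_flops([256] * 4, 4096, 7168))  # -> True
```

The last line connects back to the table: rows 1 and 3 perform the same total work (1024 × 4096 × 7168), yet throughput drops from 1233 to 932 TFLOPS when that work is split into four 256-row groups.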

While DeepGEMM shows incredible speedups, the team is open about areas that still need fine-tuning. Some matrix shapes don’t perform as well, and they’re inviting the community to submit optimization pull requests (PRs).

DeepSeek isn’t just building tools for themselves — they’re investing in open collaboration. By releasing DeepGEMM, they’re giving developers worldwide a chance to push AI training and inference even further. It’s not just about DeepSeek’s models — it’s about creating an ecosystem where innovation moves at the speed of thought.

Plus, for anyone diving into FP8 matrix operations, DeepGEMM’s clean design makes it a readable way to learn how FP8 GEMMs use Hopper tensor cores, without wading through complicated, over-engineered code.

Ready to test out DeepGEMM? Here’s what you need to get started:

Requirements:

You can access the full project and installation guide here: DeepGEMM on GitHub

DeepSeek’s Open Source Week has already introduced FlashMLA (an efficient Multi-head Latent Attention decoding kernel for Hopper GPUs) and DeepEP (an expert-parallel communication library for MoE models). With DeepGEMM, DeepSeek is further solidifying its place in the AI infrastructure space.

But this is just the beginning. With community contributions, DeepGEMM could evolve into an even more powerful tool — not just for DeepSeek’s models but for AI research at large.

So, are you ready to roll up your sleeves and explore what FP8 GEMMs can do? Let’s build the future of AI, one matrix at a time.

DMflow.chat: Smart integration for innovative communication! Supports persistent memory, customizable fields, seamless database and form connections, and API data export for more flexible and efficient web interactions!

Whoa, 3000GB/s? DeepSeek’s New Tool is Changing the Game for Large Language Models So, DeepSe...

DeepSeek’s Open-Source Week: Five Repos, One Mission—Community Innovation The world of artifi...

Charting the Future of AI: OpenAI’s Roadmap from GPT-4.5 (Orion) to GPT-5 If you’ve been foll...

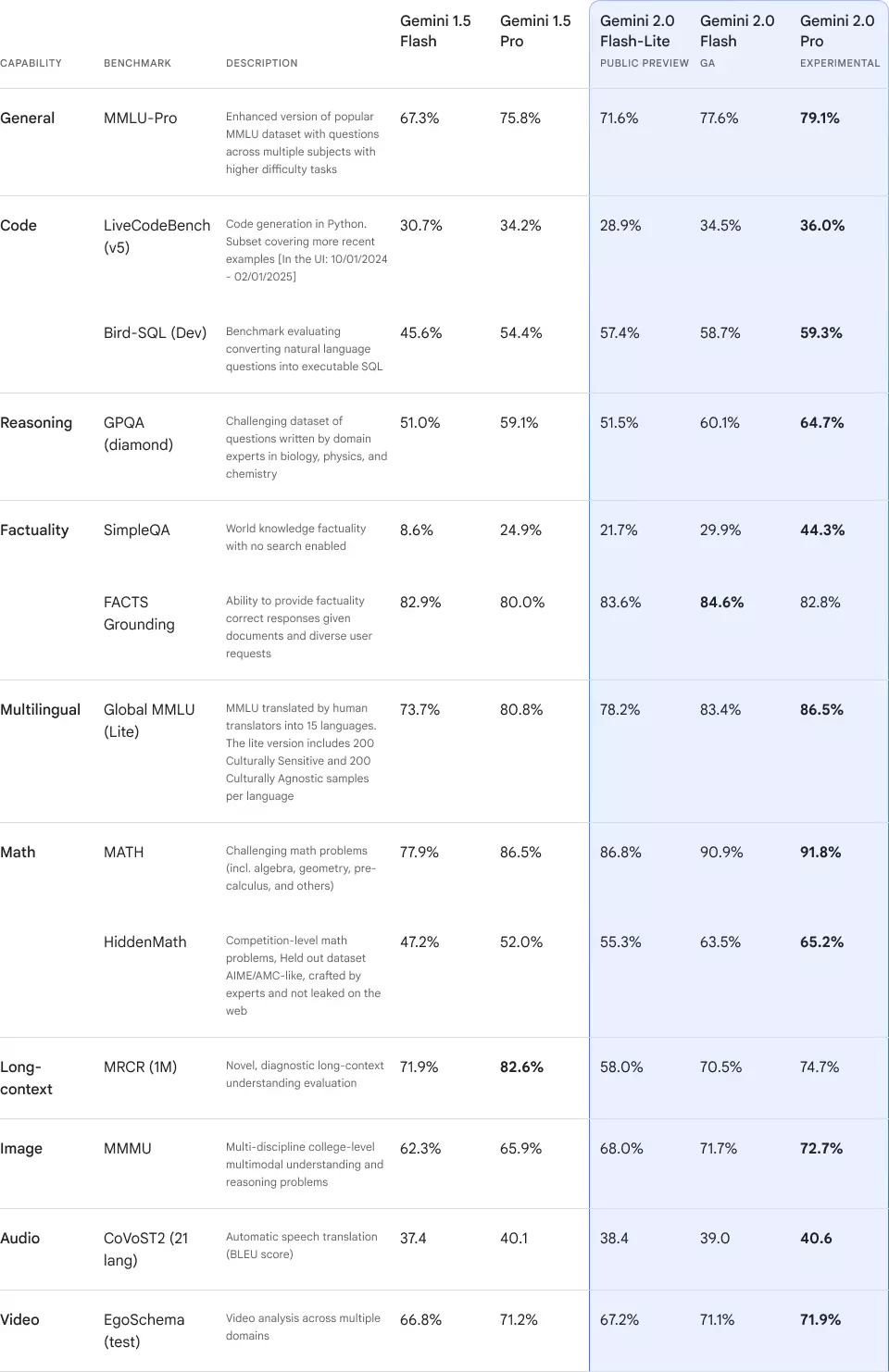

Gemini 2.0 Official Release: AI Models with Enhanced Performance Introduction In 2024, AI model...

Deep Research: A Comprehensive Analysis of ChatGPT’s Revolutionary Research Feature Introduction...

OpenAI Launches o3-mini: A New Milestone in High-Performance AI At the end of January 2025, O...

World Labs: A New Revolution in AI-Generated 3D Interactive Worlds Description World Labs, found...

The New ChatGPT Feature: Canvas - A Revolutionary Tool for Writing and Coding Description OpenAI...

RASA: The Revolutionary Force in Open Source Conversational AI Framework RASA is an open-source ...

By continuing to use this website, you agree to the use of cookies according to our privacy policy.