DeepSeek V3: A Breakthrough Open-Source Large Language Model That Outperforms GPT-4o and Claude 3.5 Sonnet on Key Benchmarks

At the end of 2024, China’s DeepSeek released a groundbreaking open-source language model, DeepSeek V3. On many benchmarks it outperformed well-known closed models such as Claude 3.5 Sonnet and GPT-4o. This article covers its key features, technical innovations, and practical applications.

Core Advantages

DeepSeek V3’s outstanding performance is mainly reflected in three aspects:

1. Model Scale and Efficiency

The DeepSeek V3 checkpoint released on Hugging Face totals 685B (685 billion) parameters: 671B for the main model plus 14B for the Multi-Token Prediction (MTP) module. This makes it one of the largest open-source language models currently available, but what really stands out is how sparingly it uses those parameters:

- Total parameters (main model): 671B

- Parameters activated per token: 37B

- Inference speed: about 60 tokens per second, according to DeepSeek (3 times faster than DeepSeek V2)
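The parameter figures above can be checked with a little arithmetic. The sketch below computes the share of the main model that is active per token and a rough lower bound on the memory needed just to hold the weights. It assumes 1 byte per parameter for FP8 (DeepSeek distributes the weights in FP8) and 2 bytes for BF16, and it ignores the KV cache, activations, and quantization scale factors, so real deployments need noticeably more.

```python
# Back-of-envelope sketch. Parameter counts are the figures quoted for DeepSeek V3;
# bytes-per-parameter values are simplifying assumptions (no scales, no KV cache).
TOTAL_PARAMS = 671e9    # main-model parameters
MTP_PARAMS = 14e9       # Multi-Token Prediction module in the released checkpoint
ACTIVE_PARAMS = 37e9    # parameters activated per token

BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}


def active_fraction(active: float, total: float) -> float:
    """Share of the main model's parameters used in one token's forward pass."""
    return active / total


def weight_memory_gb(params: float, dtype: str) -> float:
    """Lower bound on memory for the weights alone, in decimal gigabytes."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    print(f"checkpoint total: {(TOTAL_PARAMS + MTP_PARAMS) / 1e9:.0f}B")
    print(f"active per token: {active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS):.1%}")
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype} weights: ~{weight_memory_gb(TOTAL_PARAMS, dtype):,.0f} GB")
```

Roughly 5.5% of the main model does the work for any given token, which is why a 671B-parameter model can run at a per-token cost closer to that of a much smaller dense model, even though all the weights must still fit in memory.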

2. Breakthrough Architecture Design

Mixture of Experts (MoE) System

DeepSeek V3 adopts a Mixture of Experts (MoE) architecture, which is central to its efficiency:

- Operating principle: Each MoE layer contains many specialized “expert” feed-forward networks instead of one large one

- Intelligent scheduling: A router selects the few experts most relevant to each token

- Performance advantage: Only the selected experts run, so per-token compute tracks the 37B activated parameters rather than the full 671B
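To make the routing idea concrete, here is a toy, pure-Python sketch of one MoE layer applied to a single token. The experts are hypothetical stand-ins that simply scale their input, and the router centroids are made-up numbers. The gating loosely follows what the DeepSeek V3 technical report describes: a sigmoid affinity score per routed expert, top-k selection, normalization of the selected scores into gate weights, and an always-on shared expert. Real layers use learned feed-forward experts and batched tensor operations; DeepSeek V3 itself has 1 shared and 256 routed experts per MoE layer and activates 8 routed experts per token.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def make_scaler(factor: float) -> Expert:
    """Hypothetical toy expert: multiplies its input by a constant."""
    return lambda v: [factor * a for a in v]


def moe_layer(
    x: Vector,
    router: Sequence[Vector],         # one centroid per routed expert (toy values)
    experts: Sequence[Expert],        # routed experts, same order as router
    k: int,                           # routed experts activated per token
    shared: Optional[Expert] = None,  # shared expert every token passes through
) -> Tuple[Vector, List[int]]:
    # 1. Affinity of this token for each routed expert.
    scores = [sigmoid(sum(a * b for a, b in zip(x, c))) for c in router]
    # 2. Keep the k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # 3. Normalize the selected scores so the gate weights sum to 1.
    total = sum(scores[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        gate = scores[i] / total
        out = [o + gate * y for o, y in zip(out, experts[i](x))]
    # 4. Add the shared expert's output without gating.
    if shared is not None:
        out = [o + y for o, y in zip(out, shared(x))]
    return out, chosen


if __name__ == "__main__":
    experts = [make_scaler(f) for f in (1.0, 2.0, 3.0, 4.0)]
    router = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [-1.0, 0.0]]
    out, chosen = moe_layer([1.0, 0.0], router, experts, k=2)
    print(chosen, out)  # only experts 2 and 1 are evaluated
```

With four experts and k=2, half of the expert computation is skipped for this token; at DeepSeek V3's scale the skipped share is far larger.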

Technical Innovation Highlights

- Multi-head Latent Attention (MLA)

- Optimized DeepSeekMoE architecture

- Auxiliary-loss-free load-balancing strategy

- Multi-token prediction (MTP) training objective
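The auxiliary-loss-free load-balancing strategy listed above can be sketched briefly. As described in the DeepSeek V3 technical report, each routed expert carries a bias that is added to its affinity score only when choosing the top-k experts; the gate weights still come from the unbiased scores. After each training step, an overloaded expert's bias is lowered by a small step γ and an underloaded expert's bias is raised by γ, with no extra loss term involved. In the sketch, using the mean load as the overloaded/underloaded threshold and the large step `gamma=0.5` are illustrative assumptions chosen to make the effect visible.

```python
from typing import List, Sequence


def select_experts(scores: Sequence[float], biases: Sequence[float], k: int) -> List[int]:
    """Pick the top-k experts by biased score; biases affect selection only."""
    biased = [s + b for s, b in zip(scores, biases)]
    return sorted(range(len(scores)), key=lambda i: biased[i], reverse=True)[:k]


def update_biases(biases: Sequence[float], loads: Sequence[int], gamma: float) -> List[float]:
    """Nudge each bias toward balanced load (mean load is an assumed threshold)."""
    target = sum(loads) / len(loads)
    new = []
    for b, load in zip(biases, loads):
        if load > target:      # overloaded: make it less likely to be picked
            new.append(b - gamma)
        elif load < target:    # underloaded: make it more likely to be picked
            new.append(b + gamma)
        else:
            new.append(b)
    return new


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.3]
    biases = [0.0, 0.0, 0.0]
    print(select_experts(scores, biases, k=1))  # expert 0 wins on raw scores
    biases = update_biases(biases, loads=[10, 2, 0], gamma=0.5)
    print(biases, select_experts(scores, biases, k=1))  # routing shifts to expert 1
```

Because balance is enforced by adjusting routing biases rather than by adding a penalty to the training loss, the main language-modeling objective is left untouched, which is the motivation the report gives for this design.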

3. Robust Training Foundation

Training Data

- Scale: 14.8 trillion high-quality tokens

- Characteristics: Diverse, high-quality data, with a larger share of mathematics and programming samples than DeepSeek V2 and broader multilingual coverage

Training Process

- Pre-training followed by supervised fine-tuning and reinforcement learning

- 2.788M H800 GPU hours in total for the full training run

- Stable throughout: no irrecoverable loss spikes, and no rollbacks were needed
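The GPU-hour total converts into a headline cost with simple arithmetic. The DeepSeek V3 technical report splits the 2.788M H800 GPU hours into pre-training (2,664K), context-length extension (119K), and post-training (5K), and prices them at an assumed rental rate of $2 per GPU hour. The report notes that this covers only the official training run, not earlier research or ablation experiments. The sketch reproduces the sum; the rate is the report's assumption, not a measured expense.

```python
# GPU-hour breakdown as reported for DeepSeek V3, in thousands of H800 GPU hours.
GPU_HOURS_K = {
    "pre-training": 2664,
    "context extension": 119,
    "post-training": 5,
}
RATE_USD_PER_GPU_HOUR = 2.0  # rental price assumed in the technical report


def total_gpu_hours(breakdown: dict) -> int:
    """Sum the stages and convert from thousands to GPU hours."""
    return sum(breakdown.values()) * 1000


def training_cost_usd(gpu_hours: int, rate: float) -> float:
    """Cost at a flat hourly rate."""
    return gpu_hours * rate


if __name__ == "__main__":
    hours = total_gpu_hours(GPU_HOURS_K)
    print(f"{hours / 1e6:.3f}M GPU hours")
    print(f"${training_cost_usd(hours, RATE_USD_PER_GPU_HOUR) / 1e6:.3f}M")
```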

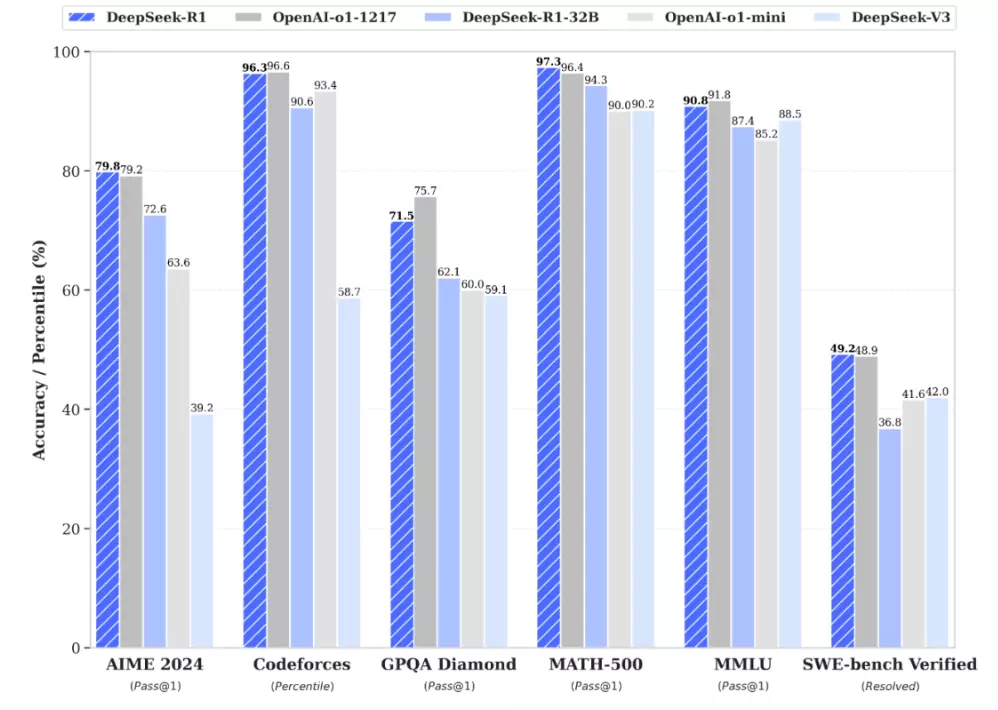

Knowledge Understanding Ability (MMLU-Pro)

- DeepSeek V3: 75.9% (second only to Claude 3.5 Sonnet’s 78.0%)

- Ahead of GPT-4o and the other models in the comparison

Complex Problem Solving (GPQA-Diamond)

- DeepSeek V3: 59.1%

- Well ahead of GPT-4o (49.9%); behind only Claude 3.5 Sonnet (65.0%)

Mathematical Reasoning Ability

- MATH-500 Test

  - Score: 90.2% (best among the compared models)

  - Far ahead of the other models, including GPT-4o

- AIME 2024 (competition mathematics)

  - Score: 39.2% (best among the compared models)

  - Leads GPT-4o by more than 23 percentage points

Programming Ability

- Codeforces Test

  - Percentile: 51.6 (best among the compared models)

  - Significantly surpasses the other models

- SWE-bench Verified (software engineering)

  - Resolved: 42.0% (second place)

  - Behind only Claude 3.5 Sonnet (50.8%)

Practical Guide: How to Use DeepSeek V3

DeepSeek V3’s weights are published on Hugging Face (repository deepseek-ai/DeepSeek-V3), so developers can download and run the model directly.
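Running the full checkpoint locally requires a multi-GPU server. For quick experiments, DeepSeek also serves the model through an OpenAI-compatible chat-completions HTTP API. The sketch below builds such a request using only the Python standard library. It assumes the endpoint https://api.deepseek.com/chat/completions and the model name deepseek-chat (which served DeepSeek V3 when it was released), and it reads the key from a hypothetical DEEPSEEK_API_KEY environment variable. Check DeepSeek's current API documentation before relying on it, since model names and endpoints can change.

```python
import json
import os
import urllib.request

API_URL = "https://api.deepseek.com/chat/completions"  # assumed endpoint; verify in the docs
MODEL = "deepseek-chat"                                 # assumed model name for DeepSeek V3


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first reply (needs network access and a key)."""
    key = os.environ["DEEPSEEK_API_KEY"]  # hypothetical variable name
    with urllib.request.urlopen(build_request(prompt, key), timeout=60) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]


if __name__ == "__main__":
    if "DEEPSEEK_API_KEY" in os.environ:
        print(ask("Prove that the square root of 2 is irrational."))
    else:
        # Without a key, just show the JSON body that would be sent.
        print(json.loads(build_request("Hello", "<your-key>").data))
```

Keeping request construction separate from sending makes the payload easy to inspect and test offline; the same body shape also works with OpenAI-compatible client libraries pointed at DeepSeek's base URL.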

Frequently Asked Questions (FAQ)

Q1: What advantages does DeepSeek V3 have compared to other open-source models?

A: DeepSeek V3 delivers strong accuracy at a low cost per token, because only 37B of its 671B parameters are active for each token. It stands out most in mathematical reasoning and programming.

Q2: Why is the MoE architecture so important?

A: MoE routes each token to a small subset of experts, so the model keeps the capacity of 671B parameters while spending compute on only about 37B of them per token. This efficiency is the key technical foundation of DeepSeek V3’s strong performance at low cost.

Q3: What application scenarios is DeepSeek V3 suitable for?

A: With its strong overall performance, it is particularly suitable for professional applications in mathematical reasoning, software development, and knowledge Q&A, while also handling general language understanding and generation tasks well.

Conclusion

The release of DeepSeek V3 marks an important milestone for open-source large language models. Its superior performance in multiple key areas, combined with its open-source nature, makes it one of the most valuable AI language models currently available. Whether for academic research or commercial applications, DeepSeek V3 shows immense potential for development.

Additional Resources