DeepSeek-V3-0324 Launches: Free for Commercial Use & Runs on Consumer Hardware!

Introduction

DeepSeek has once again quietly shaken up the industry with the release of its latest large language model – DeepSeek-V3-0324. This massive 641GB AI model suddenly appeared on the Hugging Face platform with little to no prior announcement, quickly becoming a hot topic within the AI community.

Unlike many competitors, DeepSeek has released the model weights for free and also permits commercial use, a direct challenge to the paywall model that prevails in the AI industry. Even more surprisingly, the model can run on high-end consumer computers rather than requiring expensive data center-grade infrastructure.

Completely Open: Breaking Down Paywalls with MIT License

While companies like OpenAI and Anthropic choose to keep their high-performance AI locked behind subscription paywalls, DeepSeek-V3 takes the opposite approach with an open strategy.

- Free Download: Users can directly download the full model weights from Hugging Face.

- Commercially Available: The model is released under the MIT open-source license, meaning businesses and developers can freely use it in commercial environments without hefty licensing fees.

This stands in stark contrast to the closed strategies of many US AI companies, making DeepSeek’s open model highly competitive.

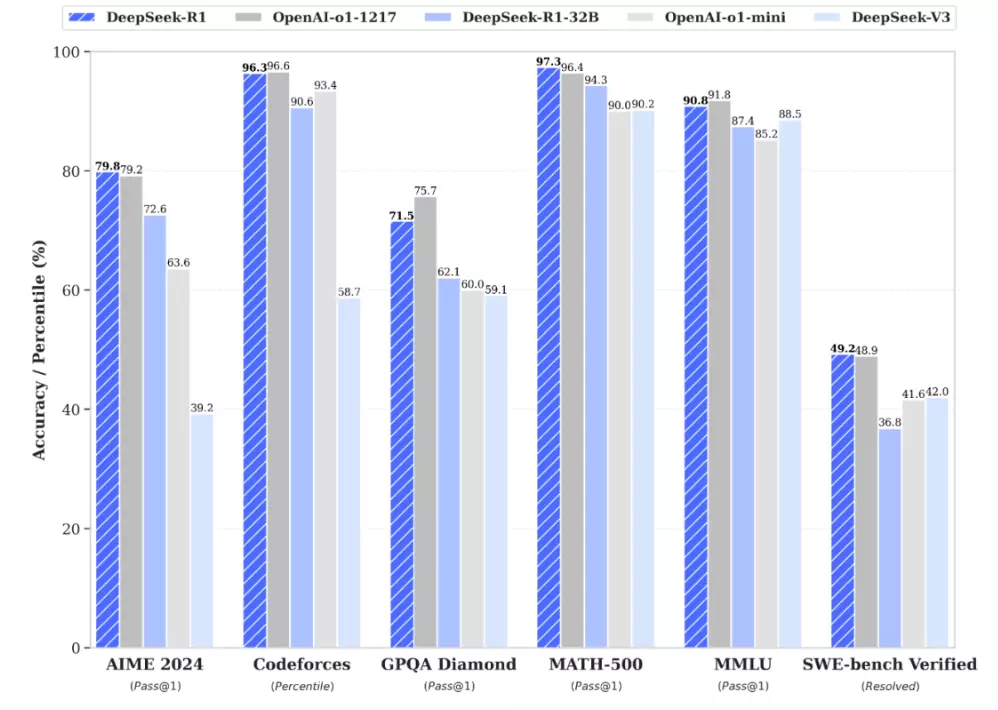

MoE Architecture + Two Key Breakthroughs Boost Efficiency

DeepSeek-V3 uses the Mixture of Experts (MoE) architecture, a design in which only a subset of the network's parameters is used for any given token, giving it a significant advantage in computational efficiency.

What is MoE?

Traditional dense models activate all of their parameters for every token they process, which consumes a great deal of compute. The MoE architecture instead splits parts of the network into many "experts" and uses a small router to pick a few of them for each token, so most parameters sit idle on any single step and unnecessary computation is avoided.

In DeepSeek-V3-0324, the total parameter count reaches 685 billion, but only around 37 billion parameters are activated per token. This keeps the compute per token far below that of a dense model of the same size, although all 685 billion weights still have to be held in memory.
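To make the idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It uses a generic softmax gate over eight toy experts; the expert functions, router scores, and the choice of k are all made up for illustration and are not DeepSeek's actual router. The last lines compute the active fraction from the two parameter counts quoted above.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Toy setup (hypothetical): 8 experts, each just scales its input.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

selected = route_top_k(router_scores, k=2)
output = moe_forward(1.0, experts, router_scores, k=2)

# Parameter counts quoted in the article.
TOTAL_PARAMS = 685e9
ACTIVE_PARAMS = 37e9
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

print("selected experts:", selected)
print("output:", output)
print(f"active fraction: {active_fraction:.1%}")
```

Only two of the eight toy experts run for this input; the other six cost nothing. With the article's figures, roughly 5.4% of the parameters are active per token.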

Two Key Innovations

- MLA (Multi-Head Latent Attention): Compresses the attention keys and values into a smaller latent representation, which cuts the memory needed to track long contexts. This helps the model keep conversations coherent and avoid “forgetting” earlier parts of the conversation.

- MTP (Multi-Token Prediction): While traditional models generate only one token at a time, DeepSeek-V3 can predict multiple tokens per step, reportedly boosting response speed by over 80% and significantly reducing latency.
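One rough way to relate multi-token prediction to a speed figure is to treat each extra predicted token as a draft that is kept only if it checks out. The sketch below is a simplified model under that assumption, not a description of how DeepSeek measured its number: the acceptance probabilities are hypothetical, and verification overhead is ignored.

```python
def expected_tokens_per_step(accept_prob, draft_tokens=1):
    """Expected tokens emitted per decoding step.

    One token is always produced. Draft token j counts only if it and all
    earlier drafts are accepted, which happens with probability p**j.
    """
    return 1.0 + sum(accept_prob ** j for j in range(1, draft_tokens + 1))

def speedup_percent(accept_prob, draft_tokens=1):
    """Extra tokens per step relative to one-token-at-a-time decoding."""
    return (expected_tokens_per_step(accept_prob, draft_tokens) - 1.0) * 100.0

# Hypothetical acceptance rates for a single extra draft token.
for p in (0.6, 0.8, 0.9):
    print(f"acceptance {p:.0%}: about {speedup_percent(p):.0f}% more tokens per step")
```

Under this model, a gain of around 80% would correspond to one extra draft token being accepted about 80% of the time.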

The integration of these technologies allows DeepSeek-V3-0324 to approach the performance of larger, closed AI systems without requiring the same massive computational resources.

Runs on High-End Consumer Computers!

Another notable feature of DeepSeek-V3-0324 is how much it lowers the barrier to running a model of this size. Individuals and small to medium-sized businesses can run it on high-end consumer hardware without relying on cloud computing.

Prominent developer tools expert Simon Willison noted that through 4-bit quantization, the model size can be reduced to 352GB, enabling high-performance consumer hardware to run this AI.
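The size figures can be sanity-checked with simple arithmetic. The sketch below assumes 1 GB means 10^9 bytes and uses only the numbers quoted in this article; the helper names are illustrative.

```python
TOTAL_PARAMS = 685e9       # total parameters, from the article
RELEASE_SIZE_GB = 641      # size of the released weights, from the article
QUANTIZED_SIZE_GB = 352    # 4-bit quantized size, from the article

def size_gb(params, bits_per_param):
    """Storage needed for `params` weights at a given bit width (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9

def bits_per_param(size_in_gb, params):
    """Average number of bits spent per weight for a file of the given size."""
    return size_in_gb * 1e9 * 8 / params

ideal_4bit_gb = size_gb(TOTAL_PARAMS, 4)
release_bits = bits_per_param(RELEASE_SIZE_GB, TOTAL_PARAMS)
quantized_bits = bits_per_param(QUANTIZED_SIZE_GB, TOTAL_PARAMS)

print(f"pure 4-bit size: {ideal_4bit_gb:.1f} GB")
print(f"released weights: {release_bits:.2f} bits per parameter")
print(f"quantized weights: {quantized_bits:.2f} bits per parameter")
```

A pure 4-bit encoding would come to 342.5 GB, so the reported 352GB works out to about 4.1 bits per parameter. The small gap is plausibly quantization metadata or tensors kept at higher precision, though that is an assumption rather than something stated in the source.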

AI researcher Awni Hannun mentioned on social media:

“DeepSeek-V3-0324 runs on a Mac Studio M3 Ultra with 512GB of memory at speeds of 20 tokens per second!”

While a $9,499 Mac Studio is still not a budget device, it costs far less than server setups that often run to hundreds of thousands of dollars, which puts a model of this class within reach of many more people.

Furthermore, the Mac Studio’s AI computation consumes less than 200 watts, a stark contrast to the thousands of watts consumed by typical AI server GPUs, significantly reducing both running costs and environmental impact.
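Combining the two quoted figures gives a rough energy cost per token. This is a back-of-the-envelope sketch: 200 watts is treated as an upper bound and 20 tokens per second as a steady rate, neither of which the source guarantees.

```python
POWER_WATTS = 200.0        # upper bound quoted in the article
TOKENS_PER_SECOND = 20.0   # generation speed quoted in the article

def joules_per_token(power_watts, tokens_per_second):
    """Energy per generated token (1 watt = 1 joule per second)."""
    return power_watts / tokens_per_second

def kwh_per_million_tokens(power_watts, tokens_per_second):
    """Energy for one million tokens, converted to kilowatt-hours (1 kWh = 3.6e6 J)."""
    return joules_per_token(power_watts, tokens_per_second) * 1e6 / 3.6e6

energy_j = joules_per_token(POWER_WATTS, TOKENS_PER_SECOND)
energy_kwh = kwh_per_million_tokens(POWER_WATTS, TOKENS_PER_SECOND)

print(f"about {energy_j:.0f} J per token")
print(f"about {energy_kwh:.2f} kWh per million tokens")
```

At these figures, generation costs at most about 10 joules per token, or roughly 2.8 kWh per million tokens.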

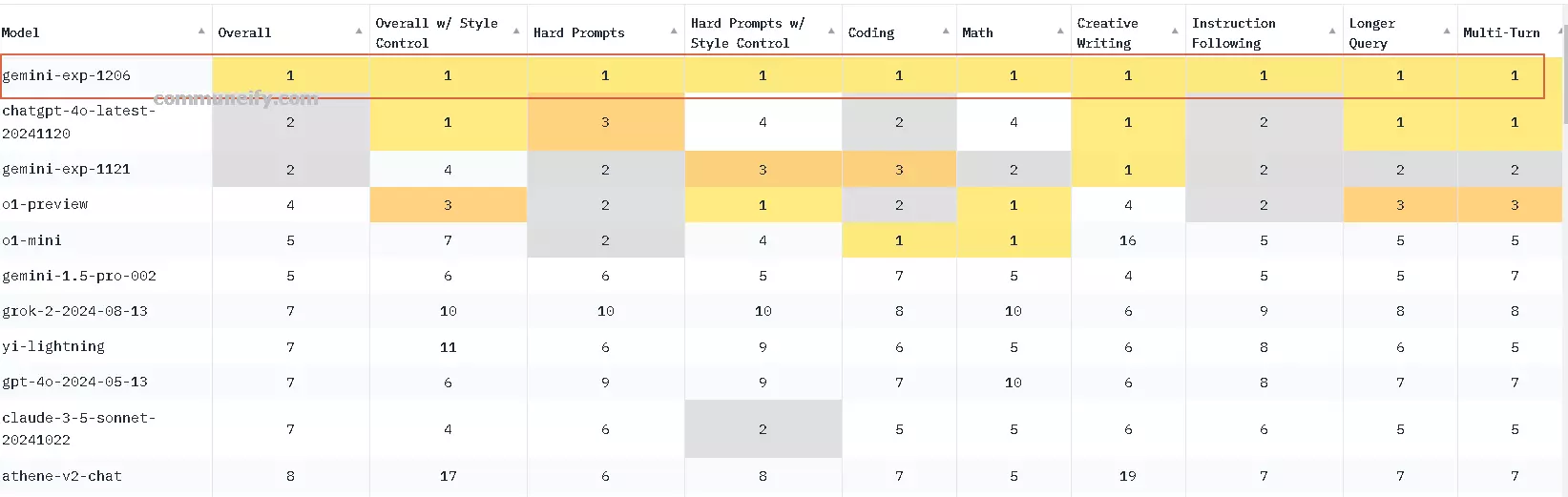

China AI vs. US AI: Diverging Development Paths

DeepSeek’s open strategy also highlights a fundamental difference between the Chinese AI industry and Western AI companies.

- US AI Companies (e.g., OpenAI, Anthropic): Emphasize closed ecosystems, monetizing through paywalls.

- Chinese AI Companies (e.g., DeepSeek, Baidu, Alibaba, Tencent): Tend more towards open source, allowing businesses, researchers, and developers to freely utilize AI technology.

Chinese AI companies, often facing limitations in accessing the most advanced Nvidia GPUs, tend to focus more on efficiency optimization and resource allocation. This constraint might ironically become a competitive advantage.

The release of DeepSeek-V3-0324 might be only the beginning. DeepSeek is reportedly planning DeepSeek-R2, which could become the next “open-source GPT-5” and further reshape the global AI market.

Conclusion: The Dawn of an Open AI Era?

DeepSeek-V3-0324 represents a new paradigm in AI development: efficient, open-source, and accessible.

Currently, users can download the full model directly from Hugging Face or experiment with the API via OpenRouter. The official DeepSeek chat interface may also be updated to the new version soon.
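For readers who want to try the API route, the sketch below builds a chat request for OpenRouter's OpenAI-compatible endpoint using only the Python standard library. The model slug is an assumption and should be checked against OpenRouter's model list. The `ask` helper needs network access and an `OPENROUTER_API_KEY` environment variable, so the demo at the bottom only constructs the request and does not send it.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
# Assumed slug for this release; verify it on OpenRouter before use.
MODEL_SLUG = "deepseek/deepseek-chat-v3-0324"

def build_request(prompt, api_key):
    """Build (but do not send) a chat completion request."""
    payload = {
        "model": MODEL_SLUG,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def ask(prompt):
    """Send the request and return the reply text (requires network and an API key)."""
    request = build_request(prompt, os.environ["OPENROUTER_API_KEY"])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

# Demo: construct the request with a placeholder key, without sending it.
request = build_request("Explain Mixture of Experts in one sentence.", "sk-placeholder")
print(request.get_method(), request.full_url)
```

Calling `ask("...")` with a real key would send the request and return the model's reply.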

Amidst a trend towards increasingly closed AI systems, DeepSeek’s open-source strategy offers a distinct path forward for developers and businesses worldwide. Which side will prevail in the contest between open and closed remains to be seen.