DeepSeek, a rapidly rising AI company, has released Janus-Pro, a family of multimodal AI models that it claims outperform OpenAI’s DALL-E 3 on image-generation benchmarks. The models come in two sizes, 1 billion and 7 billion parameters, and are available for download on the AI development platform Hugging Face. Larger parameter counts generally correlate with better performance. Janus-Pro is licensed under MIT, which permits commercial use.

Janus-Pro: A Strong Candidate for Next-Gen Multimodal Models

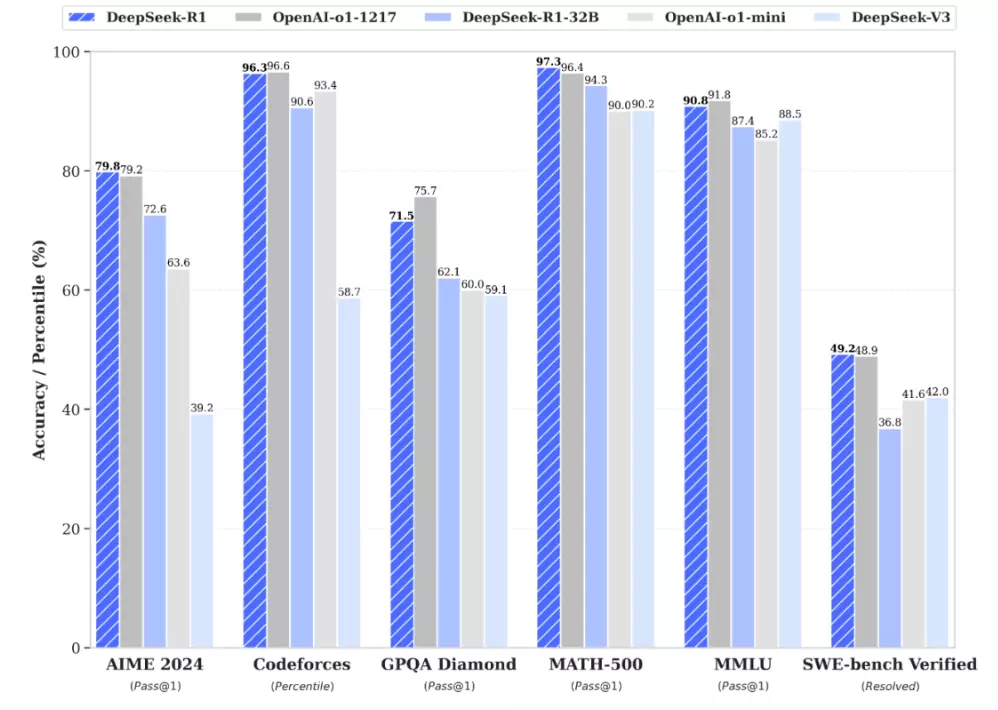

Described as an “innovative autoregressive framework,” Janus-Pro handles both image understanding and image generation. According to DeepSeek’s tests on benchmarks like GenEval and DPG-Bench, the largest model, Janus-Pro-7B, scores higher not only than DALL-E 3 but also than PixArt-alpha, Emu3-Gen, and Stability AI’s Stable Diffusion XL.

Some of the compared models are older, and Janus-Pro can only analyze small images of up to 384 x 384 resolution. Even so, its results are impressive for a model this compact and efficient. In a post on Hugging Face, DeepSeek stated:

“Janus-Pro surpasses previous unified models and matches or even exceeds task-specific models. Its simplicity, flexibility, and effectiveness position it as a strong contender for next-generation unified multimodal AI.”

Below, we’ll explore Janus-Pro’s technical features, strengths, and impact on the AI field.

Core Technology: Decoupled Visual Encoding

At the heart of Janus-Pro is its “innovative autoregressive framework,” which decouples visual encoding. Traditional multimodal models often use a single visual encoder for both understanding and generating images. Because the two tasks need different kinds of visual information (high-level semantics for understanding, fine-grained detail for generation), sharing one encoder creates conflicts that limit performance. Janus-Pro separates these tasks into two distinct encoding paths while still processing both with a single, unified Transformer.

- Image Understanding Path: This path uses SigLIP-L as its visual encoder, a vision Transformer that processes 384 x 384 resolution inputs. It extracts semantic information from images and converts it into a form the language model can understand.

- Image Generation Path: This path uses the tokenizer from LlamaGen, which converts images into sequences of discrete tokens with a downsampling rate of 16. The language model predicts these image tokens one at a time based on the text instructions, and the tokenizer’s decoder then turns the completed token sequence back into an image.
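To make the downsampling rate concrete, here is a small sketch of the arithmetic, assuming a 384 x 384 image (the resolution limit mentioned earlier) and one token per 16 x 16 pixel patch. The function name `image_token_grid` is our own illustration, not part of DeepSeek’s code.

```python
from typing import Tuple


def image_token_grid(height: int, width: int, downsample: int = 16) -> Tuple[int, int, int]:
    """Return (rows, cols, total) for a tokenizer that maps each
    downsample x downsample pixel patch to one discrete token."""
    if height % downsample or width % downsample:
        raise ValueError("side lengths must be divisible by the downsampling rate")
    rows, cols = height // downsample, width // downsample
    return rows, cols, rows * cols


# A 384 x 384 image at a downsampling rate of 16 becomes a 24 x 24 grid of tokens.
print(image_token_grid(384, 384))  # (24, 24, 576)
```

Every one of those 576 tokens has to be produced before the image can be decoded, so the token count directly affects how long generation takes.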

This decoupled design reduces conflicts between understanding and generation while increasing flexibility: each path can be optimized independently for its own task, boosting overall performance.
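The toy sketch below illustrates the decoupling idea in plain Python: two task-specific encoders feed one shared backbone, and either encoder can be replaced without touching the other. Everything here is a hypothetical stand-in (`understanding_encoder` for SigLIP-L, `generation_tokenizer` for the LlamaGen tokenizer, `SharedBackbone` for the Transformer); it shows the routing pattern only, not DeepSeek’s implementation.

```python
from typing import Callable, Dict, List, Sequence

Pixels = Sequence[float]  # toy "image": a flat list of intensities in [0, 1]


def understanding_encoder(pixels: Pixels) -> List[float]:
    """Hypothetical stand-in for SigLIP-L: continuous summary features."""
    return [sum(pixels) / len(pixels), max(pixels)]


def generation_tokenizer(pixels: Pixels, codebook_size: int = 8) -> List[int]:
    """Hypothetical stand-in for a VQ tokenizer: quantize each value to a code id."""
    return [min(int(p * codebook_size), codebook_size - 1) for p in pixels]


class SharedBackbone:
    """Hypothetical stand-in for the single Transformer both paths feed into."""

    def __call__(self, task: str, inputs: list) -> str:
        return f"{task}: received {len(inputs)} inputs"


class DecoupledModel:
    """One encoder per task, one shared backbone."""

    def __init__(self, encoders: Dict[str, Callable[[Pixels], list]], backbone: SharedBackbone):
        self.encoders = encoders  # each task's encoder can be swapped or tuned independently
        self.backbone = backbone  # shared by understanding and generation

    def run(self, task: str, pixels: Pixels) -> str:
        # Route the image through the task's own encoder, then through the shared backbone.
        return self.backbone(task, self.encoders[task](pixels))


model = DecoupledModel(
    {"understand": understanding_encoder, "generate": generation_tokenizer},
    SharedBackbone(),
)
image = [0.1, 0.5, 0.9]
print(model.run("understand", image))  # understand: received 2 inputs
print(model.run("generate", image))    # generate: received 3 inputs
```

Replacing the understanding encoder is a one-line change (`model.encoders["understand"] = new_encoder`) that leaves the generation path untouched, which is the kind of independent tuning the decoupled design is meant to allow.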

What is an Autoregressive Framework?

An autoregressive framework predicts each new output from the outputs that came before it. In language processing, it predicts the next word in a sequence. In image generation, it predicts the next piece of the image; in Janus-Pro’s case, that piece is the next discrete image token from the generation tokenizer. Janus-Pro repeats this step until the full token sequence is complete, then decodes the tokens into the finished image.
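A minimal sketch of an autoregressive sampling loop follows. It assumes the model exposes a function that returns a probability for each entry in the image tokenizer’s codebook, given the tokens generated so far. `toy_model` is a hypothetical stand-in with a 4-entry codebook; a real model would compute these probabilities with a Transformer conditioned on the text prompt.

```python
import random
from typing import Callable, List, Sequence

# A "model" maps the tokens generated so far to a probability for each codebook entry.
NextTokenProbs = Callable[[Sequence[int]], List[float]]


def generate_autoregressively(
    next_token_probs: NextTokenProbs,
    num_tokens: int,
    prompt: Sequence[int] = (),
    seed: int = 0,
) -> List[int]:
    """Sample num_tokens tokens one at a time, each conditioned on all earlier ones."""
    rng = random.Random(seed)
    sequence = list(prompt)
    for _ in range(num_tokens):
        probs = next_token_probs(sequence)  # condition on the prompt plus everything generated so far
        token = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        sequence.append(token)  # the new token becomes context for the next step
    return sequence[len(prompt):]


def toy_model(prefix: Sequence[int], codebook_size: int = 4) -> List[float]:
    """Hypothetical stand-in: uniform at first, then prefers repeating the previous token."""
    if not prefix:
        return [1.0 / codebook_size] * codebook_size
    probs = [0.1] * codebook_size
    probs[prefix[-1]] = 1.0 - 0.1 * (codebook_size - 1)
    return probs


# 576 steps = one 384 x 384 image at a downsampling rate of 16 (a 24 x 24 token grid).
image_tokens = generate_autoregressively(toy_model, num_tokens=576)
print(len(image_tokens))  # 576
```

Because each step depends on the previous output, the steps run sequentially, so generation time grows with the number of tokens in the image.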

Advantages of Janus-Pro: Outshining Task-Specific Models

Janus-Pro’s decoupled visual encoding and autoregressive framework offer several key advantages:

- Higher Accuracy: By separating image understanding and generation, Janus-Pro captures semantic details more precisely and produces images that follow instructions more closely. DeepSeek credits this design, together with an optimized training strategy, expanded training data, and a larger model, for Janus-Pro’s strong results on benchmarks like GenEval and DPG-Bench.

- Greater Flexibility: The decoupled design allows task-specific adjustments. For example, the understanding path could be fine-tuned for caption generation, while the generation path could be optimized for image editing.

- Improved Efficiency: Despite its strong performance, Janus-Pro’s small parameter counts require fewer computational resources, reducing deployment costs. This efficiency is important for the broader adoption of multimodal AI technologies.

Why Janus-Pro’s Small Size Matters

In AI, larger models often perform better, but Janus-Pro shows that smaller models can still be highly competitive. Its compact size enables:

- Lower Costs: Smaller models need less computational power, reducing training and deployment expenses.

- Faster Processing: With fewer parameters to compute at each step, compact models generally return results more quickly on the same hardware.

- Wider Accessibility: Smaller models can run on a wider range of hardware, potentially including some mobile and edge devices, broadening AI applications.
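A rough way to see why size matters is to estimate how much memory the weights alone occupy: parameter count times bytes per parameter. The sketch below uses the nominal 1B and 7B sizes from the model names and assumes 16-bit (bf16) weights; the 4-bit row is a hypothetical quantized setting, not a configuration the article says DeepSeek provides.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Ignores activations, caches, and framework overhead, so real usage is higher.
    """
    return num_params * bytes_per_param / 1e9


# Nominal sizes taken from the model names; exact parameter counts differ somewhat.
for name, params in [("Janus-Pro-1B", 1e9), ("Janus-Pro-7B", 7e9)]:
    for dtype, nbytes in [("bf16", 2.0), ("4-bit", 0.5)]:
        print(f"{name} @ {dtype}: ~{weight_memory_gb(params, nbytes):.1f} GB")
```

At bf16 this works out to roughly 2 GB versus 14 GB for the weights, before any runtime overhead, which is a large part of why the smaller model is easier to deploy on modest hardware.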

Impact on the AI Field

Janus-Pro represents a significant step forward, showcasing DeepSeek’s technical capabilities and potentially shaping the future of multimodal AI.

- Advancing Multimodal AI: Janus-Pro’s decoupled visual encoding within a unified autoregressive framework could influence how future multimodal models are designed.

- Promoting Accessibility: Its efficiency and low costs lower the barrier to AI adoption, expanding its potential applications across industries.

- Intensifying Competition: DeepSeek’s rapid rise, especially after its chatbot app topped the Apple App Store charts, has drawn attention from analysts and experts. Janus-Pro’s release is expected to further intensify competition in AI.

Licensing and Accessibility

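Janus-Pro’s code is licensed under MIT, a permissive open-source license allowing free use, modification, and distribution, including for commercial purposes. Use of the model weights is governed by the separate DeepSeek Model License, which also permits commercial use but adds use-based restrictions, so its terms are worth checking before deployment. Permissive licensing like this is likely to accelerate Janus-Pro’s adoption and advance multimodal AI technology.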

Conclusion

DeepSeek’s Janus-Pro is a notable advance in multimodal AI. Its decoupled architecture, strong benchmark results, compact size, and open licensing make it a strong contender among next-generation unified models. As DeepSeek continues to evolve, it is poised to play an increasingly significant role in shaping the future of AI.

FAQs

Q: What can Janus-Pro be used for?

A: Janus-Pro handles both image understanding and text-to-image generation. Potential applications include:

- Image Captioning: Generating descriptive text from images.

- Image Editing: Modifying images based on text instructions.

- Visual Q&A: Answering questions about image content.

- Story Creation: Generating image sequences to tell a story.

Q: How can I start using Janus-Pro?

A: Visit DeepSeek’s GitHub repository (deepseek-ai/Janus) for code and usage instructions; the model weights are available on Hugging Face.
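As a starting point, the model files can be fetched from Hugging Face with the `huggingface_hub` library’s `snapshot_download` function. This is a sketch that assumes the repositories are named `deepseek-ai/Janus-Pro-1B` and `deepseek-ai/Janus-Pro-7B`; the helper names are our own, and running the model still requires the inference code and instructions from DeepSeek’s GitHub repository.

```python
def janus_pro_repo_id(size: str) -> str:
    """Map a size label to its assumed Hugging Face repo id (deepseek-ai/Janus-Pro-<size>)."""
    size = size.upper()
    if size not in {"1B", "7B"}:
        raise ValueError(f"unknown size {size!r}; expected '1B' or '7B'")
    return f"deepseek-ai/Janus-Pro-{size}"


def download_janus_pro(size: str = "7B") -> str:
    """Download all files in the model repo and return the local directory path."""
    # Imported lazily so janus_pro_repo_id works without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=janus_pro_repo_id(size))
```

Calling `download_janus_pro("1B")` fetches the smaller model (this needs `pip install huggingface_hub` and network access) and returns the folder where the files were saved.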

Q: Does Janus-Pro support Chinese?

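A: Likely. Janus-Pro is built on DeepSeek’s own language models, which were trained on both Chinese and English text, so Chinese prompts are expected to work well, though results may vary by task.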