DMflow.chat


This article summarizes best practices for building high-performance large language model (LLM) AI agents based on practical experience. It explores different agent system architectures, from simple workflows to autonomous agents, and provides guidance on when to use each approach. Additionally, the article delves into the role of frameworks and emphasizes the importance of simplicity, transparency, and well-designed agent-computer interfaces (ACI).

Image Source: ChatGPT 4o

Key Difference: Workflows follow fixed paths, while AI agents can adapt flexibly.

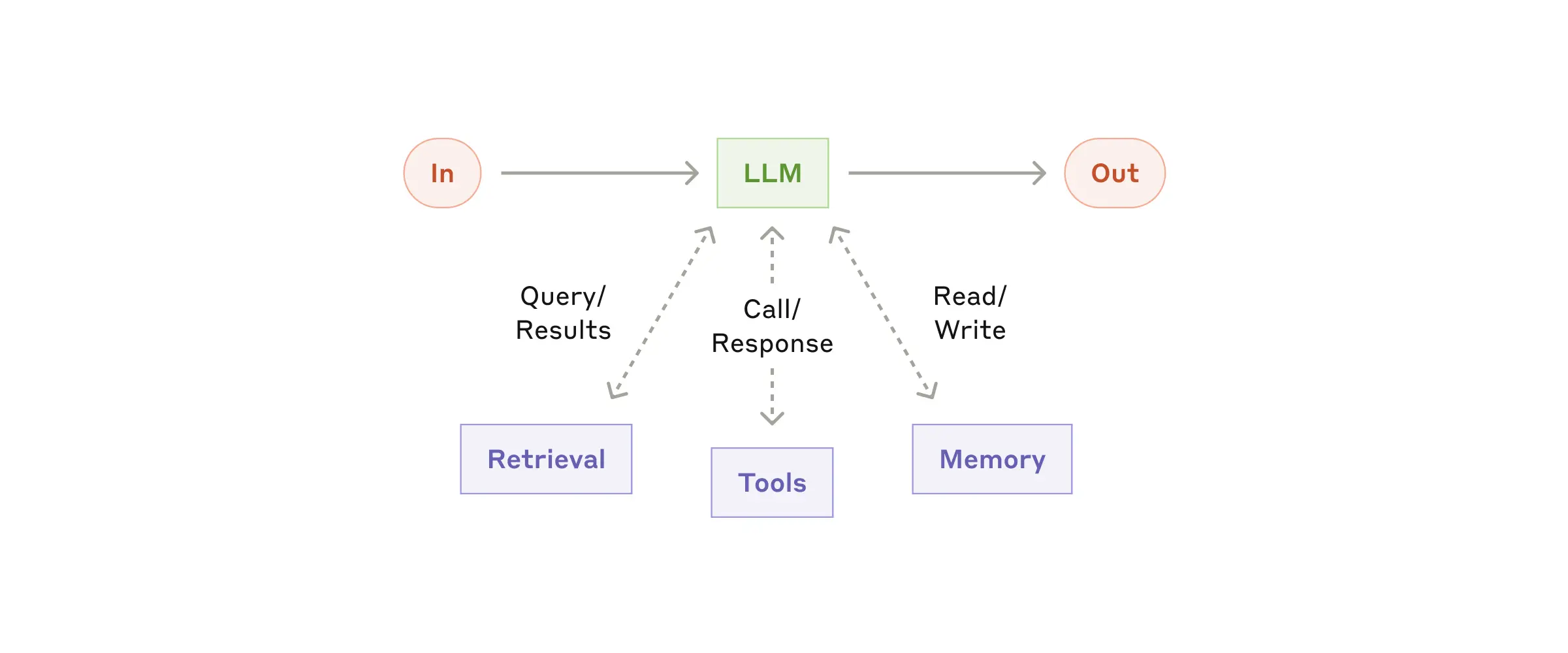

Key Point: Adjust augmentation features based on specific use cases and ensure they provide a clear, comprehensive interface for the LLM.
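As a rough illustration of what a clear interface for the LLM might look like, here is a minimal sketch of a tool registry. It assumes tools are one of the augmentations in play. The `Tool` class, `render_tools`, `call_tool`, and the `lookup_note` tool with its sample note are all hypothetical names and data made up for this example, not part of any specific library.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class Tool:
    """A hypothetical tool description exposed to the model."""
    name: str
    description: str            # tells the model when the tool is appropriate
    parameters: Dict[str, str]  # parameter name -> plain-language meaning
    run: Callable[..., Any]     # the code executed when the tool is called


def render_tools(tools: Dict[str, Tool]) -> str:
    """Render every tool as text that could be placed in a prompt."""
    lines = []
    for tool in tools.values():
        params = ", ".join(f"{k}: {v}" for k, v in tool.parameters.items())
        lines.append(f"- {tool.name}({params}): {tool.description}")
    return "\n".join(lines)


def call_tool(tools: Dict[str, Tool], name: str, **kwargs: Any) -> Any:
    """Dispatch a tool call requested by the model, rejecting unknown names."""
    if name not in tools:
        raise KeyError(f"unknown tool: {name}")
    return tools[name].run(**kwargs)


# Illustrative in-memory data; a real retrieval tool would query a search index.
NOTES = {"refunds": "Refunds are processed within 5 days."}

TOOLS = {
    "lookup_note": Tool(
        name="lookup_note",
        description="Return the stored note for a topic, or an empty string.",
        parameters={"topic": "exact topic keyword"},
        run=lambda topic: NOTES.get(topic, ""),
    )
}

print(render_tools(TOOLS))
print(call_tool(TOOLS, "lookup_note", topic="refunds"))
```

Keeping the name, description, and parameter meanings next to the function makes it harder for the text shown to the model to drift away from what the code actually does.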

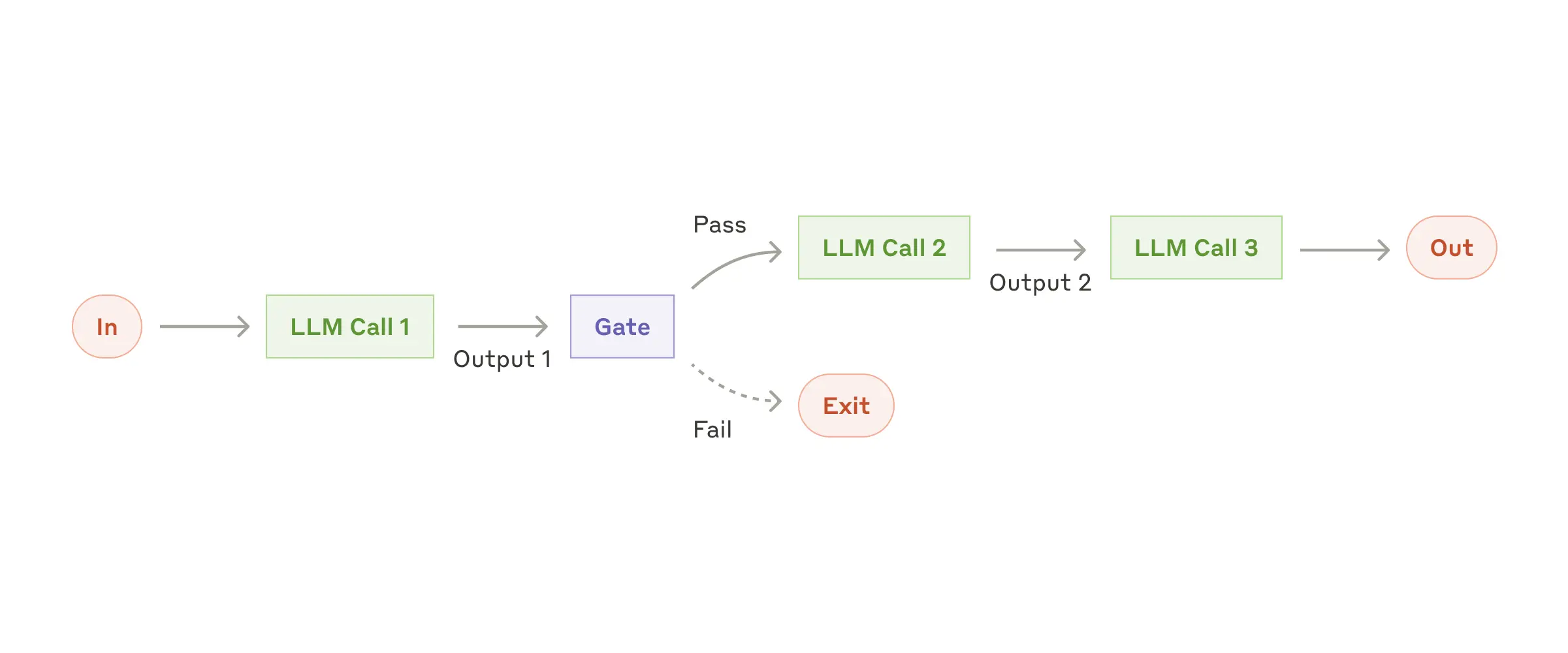

Overview: Break down tasks into a series of steps, with each LLM call handling the output of the previous step.

When to Use: Suitable for tasks that can be clearly broken down into fixed subtasks, trading latency for higher accuracy.
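The chaining idea above can be sketched in a few lines. This is a minimal sketch, not a definitive implementation: `fake_llm` is a stand-in for a real model call, and the optional `gate` check between steps is an assumption added for illustration.

```python
from typing import Callable, List

LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; it just wraps the prompt it received."""
    return f"<{prompt}>"


def run_chain(
    llm: LLM,
    steps: List[str],
    user_input: str,
    gate: Callable[[str], bool] = lambda text: bool(text.strip()),
) -> str:
    """Run each step on the previous step's output, stopping if the gate fails."""
    current = user_input
    for instruction in steps:
        current = llm(f"{instruction}: {current}")
        # Hypothetical programmatic check between steps (an assumption here).
        if not gate(current):
            raise ValueError(f"step {instruction!r} produced unusable output")
    return current


result = run_chain(fake_llm, ["outline", "draft"], "agents")
print(result)
```

Each call sees only the previous output, which keeps every prompt small and focused at the cost of one extra round trip per step.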

Example Use Cases:

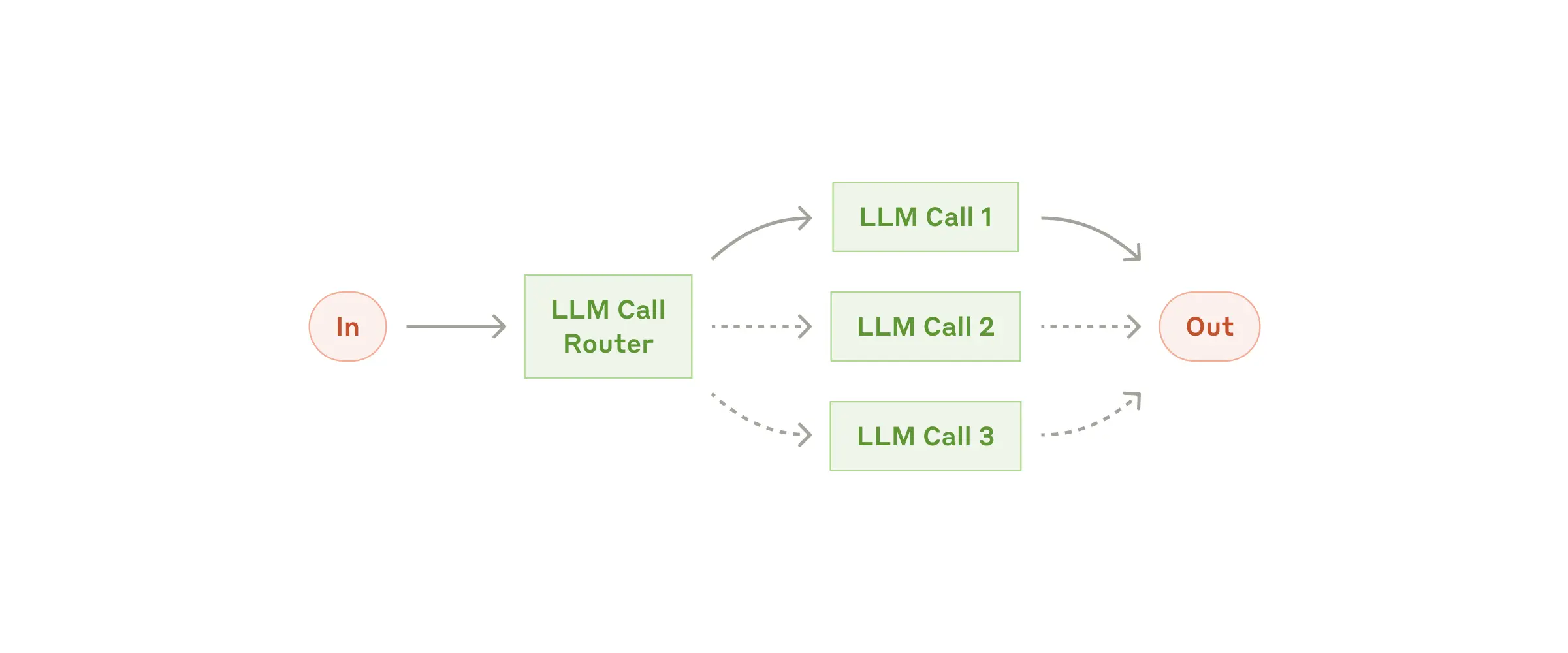

Overview: Classify input and direct it to specialized subsequent tasks, achieving prompt specialization and separation of concerns.

When to Use: Suitable for complex tasks with different categories that are best handled separately and can be accurately classified.
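A minimal sketch of routing, assuming a classifier call that returns a category label. The `fake_classifier` keyword rule stands in for an LLM classification call, and the handler names and the `general` fallback are hypothetical.

```python
from typing import Callable, Dict

LLM = Callable[[str], str]


def fake_classifier(prompt: str) -> str:
    """Stand-in for an LLM classification call, using a simple keyword rule."""
    return "refund" if "refund" in prompt.lower() else "general"


def route(
    classify: LLM,
    handlers: Dict[str, Callable[[str], str]],
    text: str,
    default: str = "general",
) -> str:
    """Classify the input, then hand it to the specialized handler for that label."""
    label = classify(text).strip().lower()
    # Fall back to the default handler if the classifier returns an unknown label.
    handler = handlers.get(label, handlers[default])
    return handler(text)


# Each handler would normally wrap its own specialized prompt and model call.
HANDLERS = {
    "refund": lambda text: f"[refund prompt] {text}",
    "general": lambda text: f"[general prompt] {text}",
}

print(route(fake_classifier, HANDLERS, "I want a refund"))
```

Because each category has its own handler, one prompt can be tuned without affecting how the other categories behave.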

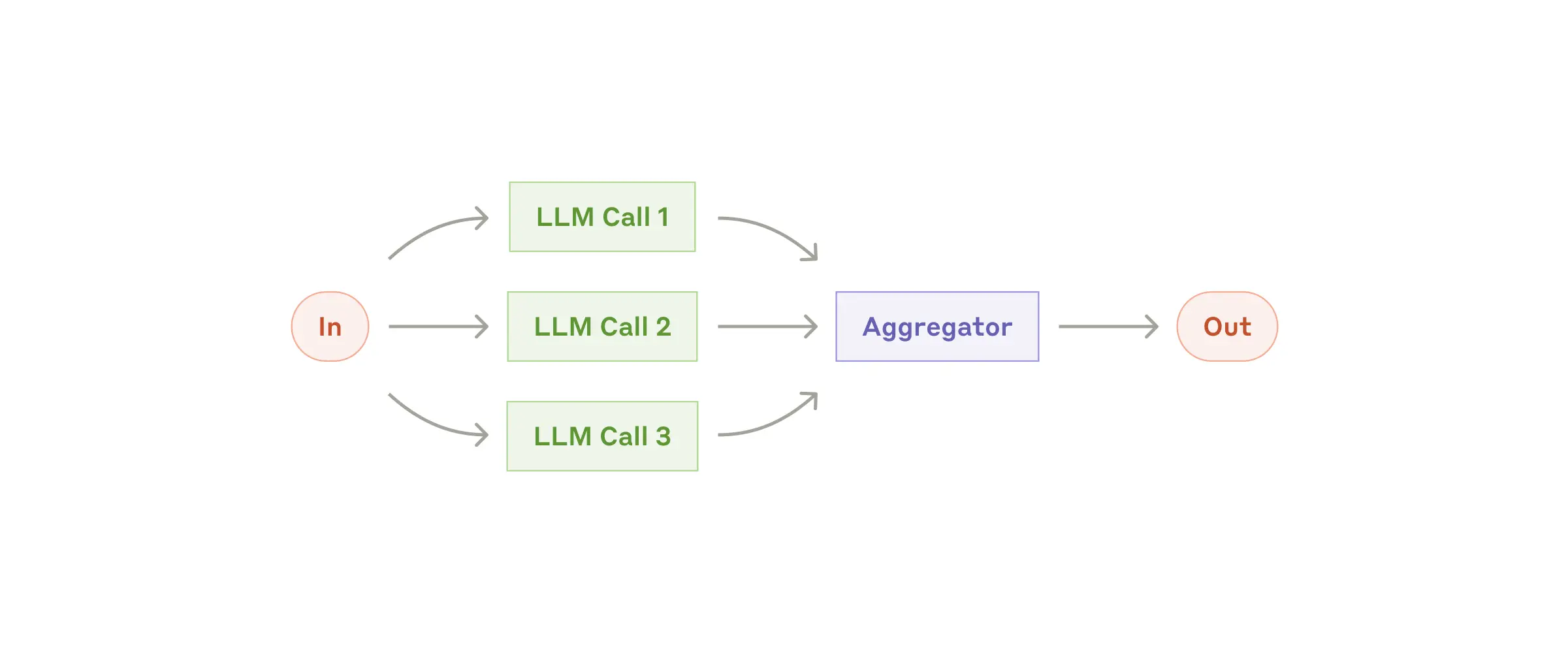

Overview: Multiple LLM calls work on a task simultaneously, and their outputs are aggregated programmatically.

Two Main Variants:

* Sectioning: Break down a task into independent subtasks and execute them in parallel.
* Voting: Run the same task multiple times to get different outputs.

When to Use: Effective when subtasks can be processed in parallel to increase speed, or when multiple perspectives or attempts are needed for higher-confidence results.
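Both variants can be sketched with Python's standard thread pool. This is a minimal sketch under stated assumptions: `fake_llm` is a deterministic stand-in for a model call, and majority voting is only one possible way to aggregate repeated runs.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Deterministic stand-in for a model call."""
    return prompt.upper()


def section(llm: LLM, subtasks: List[str]) -> List[str]:
    """Sectioning: run independent subtasks in parallel, keeping input order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(llm, subtasks))


def vote(llm: LLM, prompt: str, runs: int = 3) -> str:
    """Voting: run the same prompt several times and return the most common answer."""
    with ThreadPoolExecutor() as pool:
        answers = list(pool.map(llm, [prompt] * runs))
    return Counter(answers).most_common(1)[0][0]


print(section(fake_llm, ["summarize part a", "summarize part b"]))
print(vote(fake_llm, "is this safe"))
```

With a real model, the repeated runs in `vote` would typically use sampling so that the answers can differ. The stub here always agrees with itself.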

Sectioning Use Cases:

Voting Use Cases:

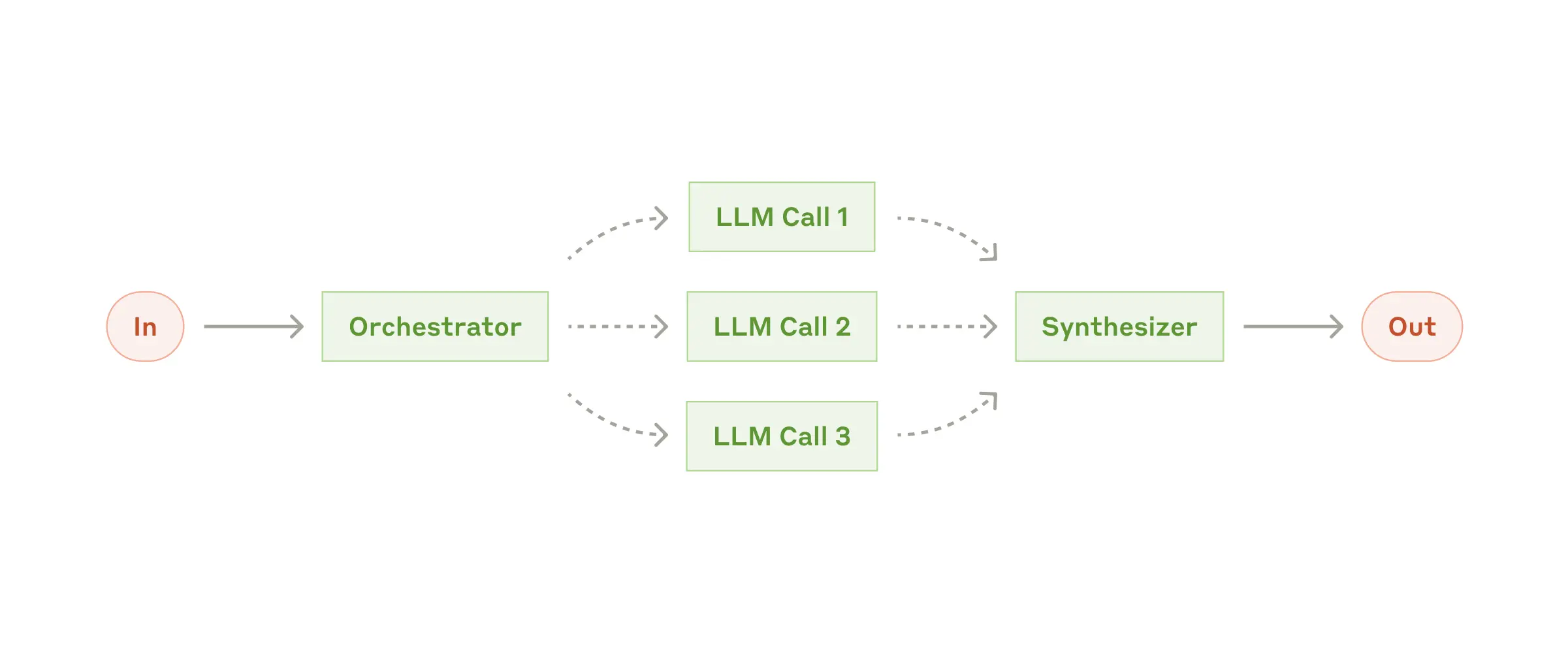

Overview: A central LLM dynamically breaks down tasks, delegates them to worker LLMs, and integrates their results.

When to Use: Suitable for complex tasks where the required subtasks cannot be predicted (e.g., in programming, the number of files to be changed and the nature of changes in each file may depend on the task).
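The following is a minimal sketch of the orchestrator-worker shape described above. The three callables (`fake_plan`, `fake_worker`, `fake_synthesize`) are hypothetical stand-ins for LLM calls. The point is only that the subtask list is produced at run time rather than hard-coded.

```python
from typing import Callable, List

LLM = Callable[[str], str]


def orchestrate(
    plan: Callable[[str], List[str]],
    worker: LLM,
    synthesize: Callable[[List[str]], str],
    task: str,
) -> str:
    """Plan subtasks dynamically, delegate each to a worker, then merge results."""
    subtasks = plan(task)  # decided per task, not fixed in advance
    results = [worker(subtask) for subtask in subtasks]
    return synthesize(results)


def fake_plan(task: str) -> List[str]:
    """Pretend the orchestrator returned one subtask per comma-separated item."""
    return [part.strip() for part in task.split(",") if part.strip()]


def fake_worker(subtask: str) -> str:
    """Pretend a worker LLM completed the subtask."""
    return f"done({subtask})"


def fake_synthesize(results: List[str]) -> str:
    """Pretend the orchestrator merged the worker outputs."""
    return "; ".join(results)


summary = orchestrate(fake_plan, fake_worker, fake_synthesize, "edit a.py, edit b.py")
print(summary)
```

The workers run sequentially here for simplicity. Since they are independent, they could also be dispatched in parallel.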

Example Use Cases:

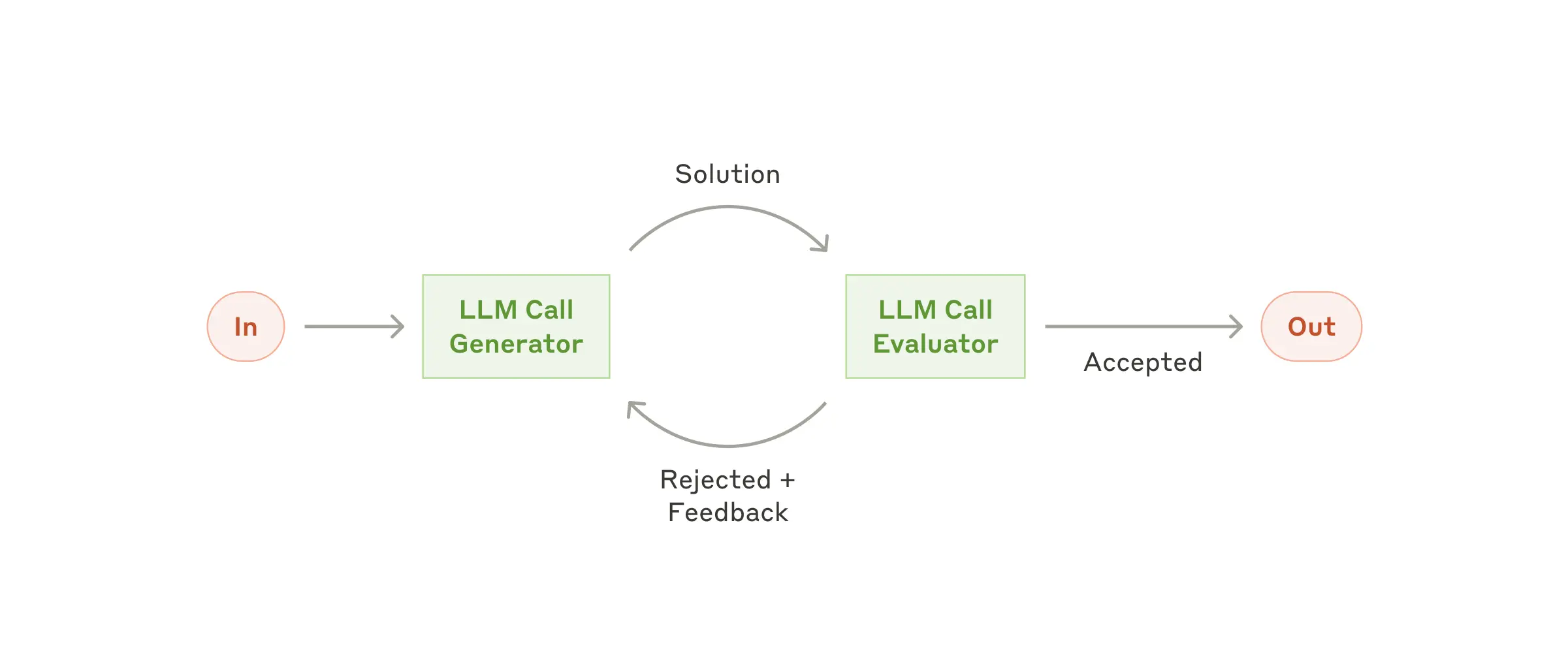

Overview: One LLM generates a response, while another LLM provides evaluation and feedback in a loop.

When to Use: Particularly effective when there are clear evaluation criteria, and iterative improvement can yield measurable value.
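The generate-evaluate loop can be sketched as follows. This is a minimal sketch: `fake_generate` and `fake_evaluate` are stand-ins for the two LLM roles, and the `max_rounds` cap is an assumption added so the loop always terminates.

```python
from typing import Callable, Tuple


def refine(
    generate: Callable[[str, str], str],
    evaluate: Callable[[str], Tuple[bool, str]],
    task: str,
    max_rounds: int = 3,
) -> str:
    """Regenerate a draft from evaluator feedback until accepted or out of rounds."""
    feedback = ""
    draft = generate(task, feedback)
    for _ in range(max_rounds):
        accepted, feedback = evaluate(draft)
        if accepted:
            break
        draft = generate(task, feedback)
    return draft


def fake_generate(task: str, feedback: str) -> str:
    """Stand-in generator: adds emphasis only after receiving feedback."""
    return task + "!" if feedback else task


def fake_evaluate(draft: str) -> Tuple[bool, str]:
    """Stand-in evaluator with one clear criterion: the draft must end with '!'."""
    ok = draft.endswith("!")
    return ok, "" if ok else "add emphasis"


final = refine(fake_generate, fake_evaluate, "hello")
print(final)
```

The loop is only as good as the evaluator's criterion, which is why the pattern fits tasks where acceptance can be stated clearly.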

Example Use Cases:

Overview: AI agents operate autonomously after receiving commands or engaging in interactive discussions with users. They plan and execute independently and may seek human input for clarification or judgment.

When to Use: Suitable for open-ended problems where the number of steps is hard to predict and cannot be hard-coded into a fixed path. This approach requires a certain level of trust in the LLM’s decision-making.
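In code, an agent of this kind is often little more than a loop in which the model picks the next action based on what it has observed so far. The sketch below assumes that shape. `fake_decide` stands in for the LLM's decision, the `upper` tool is a toy example, and the `max_steps` limit is an assumed safeguard rather than something the article prescribes.

```python
from typing import Callable, Dict, List, Tuple

Action = Tuple[str, str]  # (tool name or "finish", argument)


def run_agent(
    decide: Callable[[List[str]], Action],
    tools: Dict[str, Callable[[str], str]],
    goal: str,
    max_steps: int = 5,
) -> str:
    """Let the decision function choose tools until it finishes or hits the limit."""
    history = [f"goal: {goal}"]
    for _ in range(max_steps):
        name, arg = decide(history)
        if name == "finish":
            return arg
        if name not in tools:
            # Report the mistake back so the next decision can correct it.
            history.append(f"error: unknown tool {name}")
            continue
        history.append(f"{name}({arg}) -> {tools[name](arg)}")
    return "stopped: step limit reached"


def fake_decide(history: List[str]) -> Action:
    """Stand-in for the LLM: call the tool once, then finish with its result."""
    if len(history) == 1:
        return ("upper", history[0][len("goal: "):])
    return ("finish", history[-1].split(" -> ")[1])


TOOLS = {"upper": str.upper}

answer = run_agent(fake_decide, TOOLS, "hi")
print(answer)
```

The step limit reflects the trust trade-off mentioned above: the model chooses the path, but the surrounding code still bounds how far it can go.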

Example Use Cases:

These patterns are not rigid rules but starting points that can be combined and adjusted according to specific needs. The key is to measure performance and iterate, only adding complexity when it significantly improves results.

All-in-one DMflow.chat: Supports multi-platform integration, persistent memory, and flexible customizable fields. Connect databases and forms without extra development, plus interactive web pages and API data export, all in one step!

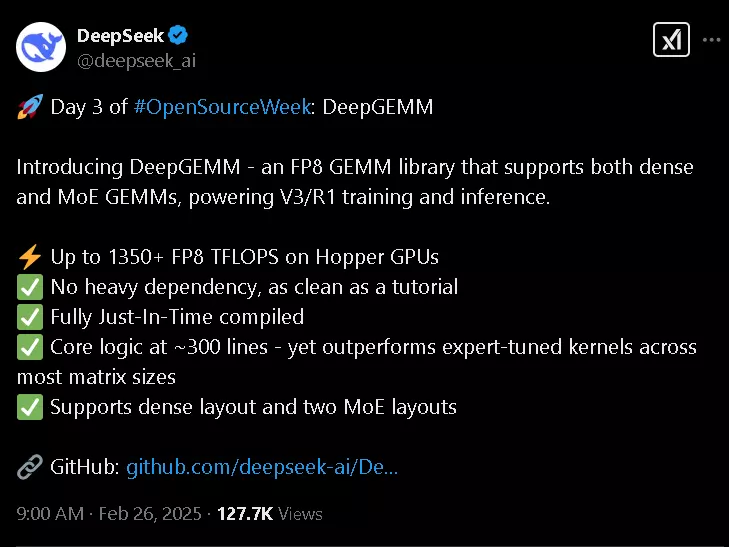

DeepSeek Open Source Week Day 3: Introducing DeepGEMM — A Game-Changer for AI Training and Infere...

Whoa, 3000GB/s? DeepSeek’s New Tool is Changing the Game for Large Language Models So, DeepSe...

DeepSeek’s Open-Source Week: Five Repos, One Mission—Community Innovation The world of artifi...

Charting the Future of AI: OpenAI’s Roadmap from GPT-4.5 (Orion) to GPT-5 If you’ve been foll...

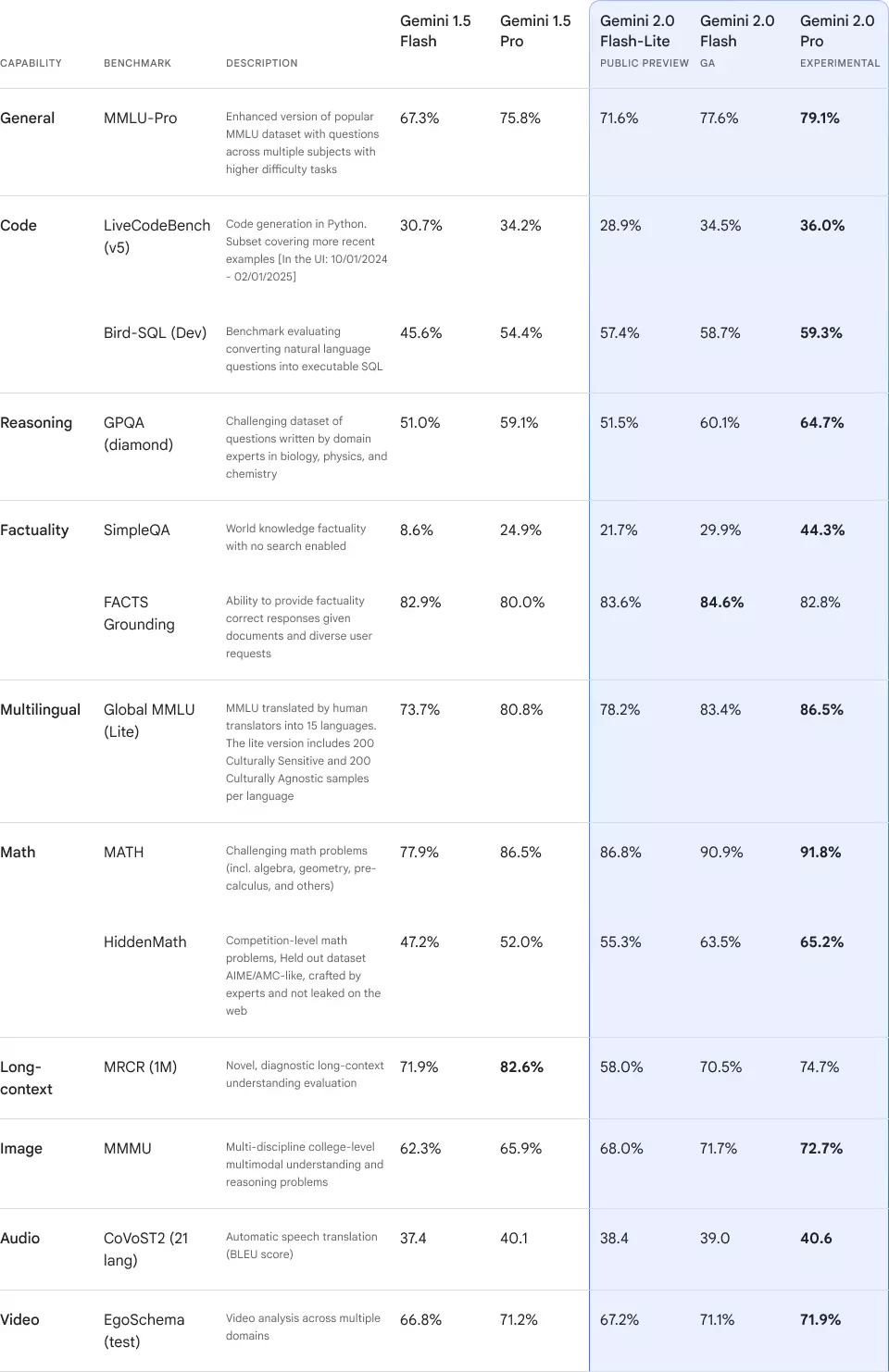

Gemini 2.0 Official Release: AI Models with Enhanced Performance Introduction In 2024, AI model...

Deep Research: A Comprehensive Analysis of ChatGPT’s Revolutionary Research Feature Introduction...

Mistral Large 2: A Breakthrough in AI Language Models Mistral Large 2 is a next-generation large...

DeepSeek’s Open-Source Week: Five Repos, One Mission—Community Innovation The world of artifi...

Anthropic’s Major Update: Claude 3.5 Series Release and Revolutionary Computer Control Feature A...

By continuing to use this website, you agree to the use of cookies according to our privacy policy.