Mistral Large 2: A Breakthrough in AI Language Models

Mistral Large 2 is a next-generation large language model that emphasizes cost-efficiency, speed, and performance. It supports many natural languages and programming languages and performs strongly across standard benchmarks. This article gives an overview of Mistral Large 2’s features, performance, and applications.

Overview of Mistral Large 2

Mistral Large 2 is a powerful language model with a 128k context window. It supports dozens of languages, including French, German, Spanish, Italian, Portuguese, Arabic, Hindi, Russian, Chinese, Japanese, and Korean. Additionally, it supports over 80 programming languages, such as Python, Java, C, C++, JavaScript, and Bash.

The model is designed for single-node inference with long-context applications in mind: at 123 billion parameters, it is sized to run at high throughput on a single node. Mistral Large 2 is released under the Mistral Research License, which permits use for research and non-commercial purposes. Commercial use requires a Mistral Commercial License.
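To make the single-node claim concrete, here is a rough back-of-the-envelope sketch of the memory needed just to hold 123 billion weights at a few common precisions. The node size (8 accelerators with 80 GB each) is an assumption chosen for illustration and does not come from the article, and the estimate ignores the KV cache and activations, which grow with context length.

```python
# Weight-only memory estimate for a 123B-parameter model.
PARAMS = 123e9  # parameter count stated in the article

# Bytes used per parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def weight_memory_gb(params: float, dtype: str) -> float:
    """Memory needed for the weights alone, in GB (10^9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical node: 8 accelerators x 80 GB (an assumption, not from the article).
NODE_MEMORY_GB = 8 * 80

for dtype in BYTES_PER_PARAM:
    need = weight_memory_gb(PARAMS, dtype)
    fits = need < NODE_MEMORY_GB
    print(f"{dtype}: {need:.0f} GB of weights, fits in assumed node: {fits}")
```

Under these assumptions the bf16 weights take about 246 GB, which leaves headroom on such a node for the KV cache that a 128k context window requires.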

General Performance

Mistral Large 2 sets a new standard for the trade-off between performance and cost of serving on evaluation metrics. Specifically, on MMLU (Massive Multitask Language Understanding), the pre-trained version achieves an accuracy of 84.0%, setting a new point on the performance/cost Pareto frontier of open models.
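For readers unfamiliar with the term, a model is on the performance/cost Pareto frontier if no other model is at least as cheap and at least as accurate while being strictly better on one of the two. The sketch below illustrates the idea; the model names and numbers are placeholders invented for the example and are not measured results.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    cost: float      # serving cost in arbitrary units (lower is better)
    accuracy: float  # benchmark accuracy in percent (higher is better)


def pareto_frontier(models: list[Model]) -> list[Model]:
    """Return the models that no other model dominates, sorted by cost."""
    frontier = []
    for m in models:
        dominated = any(
            o.cost <= m.cost
            and o.accuracy >= m.accuracy
            and (o.cost < m.cost or o.accuracy > m.accuracy)
            for o in models
        )
        if not dominated:
            frontier.append(m)
    return sorted(frontier, key=lambda m: m.cost)


# Placeholder values for illustration only; these are not benchmark results.
candidates = [
    Model("model_a", cost=1.0, accuracy=70.0),
    Model("model_b", cost=2.0, accuracy=68.0),  # dominated by model_a
    Model("model_c", cost=3.0, accuracy=84.0),
    Model("model_d", cost=5.0, accuracy=84.0),  # dominated by model_c
]

print([m.name for m in pareto_frontier(candidates)])
```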

Code and Reasoning Capabilities

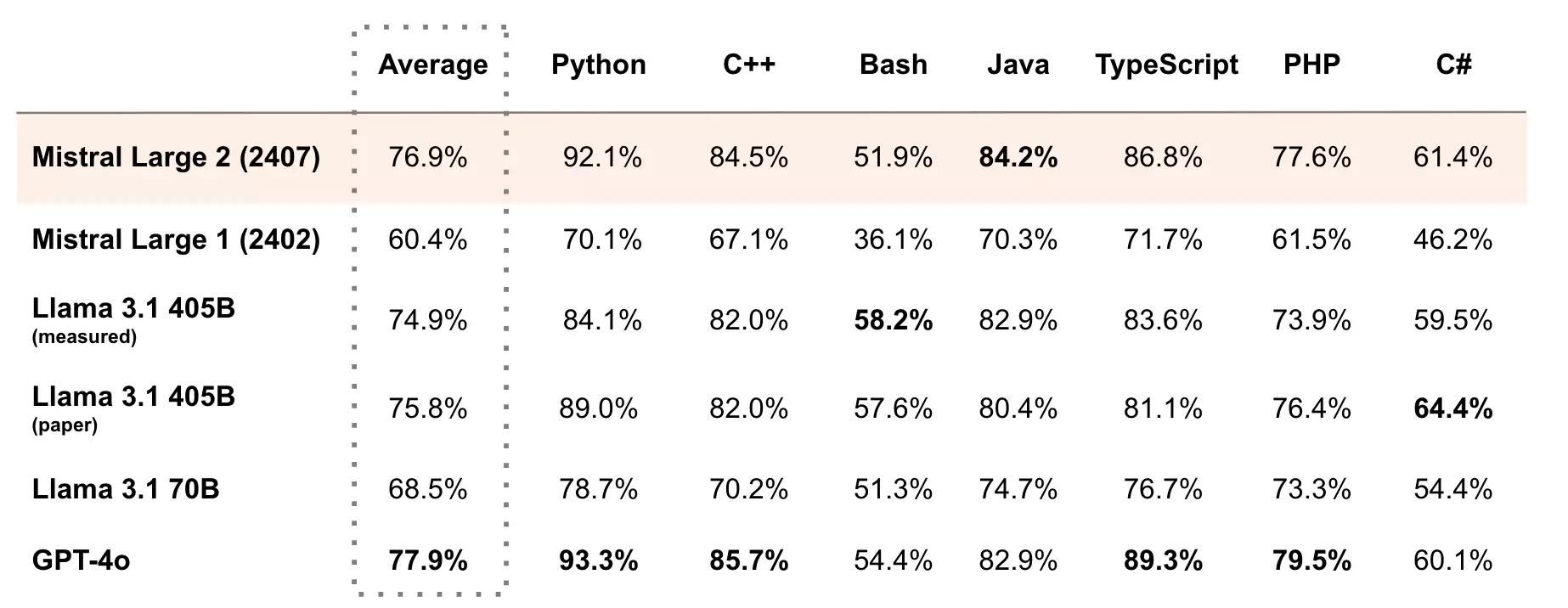

Building on experience with Codestral 22B and Codestral Mamba, Mistral Large 2 was trained on a very large proportion of code. It significantly outperforms the previous Mistral Large and performs on par with leading models such as GPT-4o, Claude 3 Opus, and Llama 3 405B.

To enhance the model’s reasoning capabilities, the development team focused on minimizing its tendency to produce “hallucinations” (plausible-sounding but incorrect or irrelevant information). Through fine-tuning, the model responds more cautiously and accurately, which makes its output more reliable.

Moreover, Mistral Large 2 is trained to honestly admit when it cannot find a solution or lacks sufficient information to provide an exact answer. This commitment to accuracy is reflected in improved performance on mathematical benchmarks, showcasing its enhanced reasoning and problem-solving abilities.

Image from mistral-large-2407

Instruction Following and Alignment

Mistral Large 2 has significantly improved instruction-following and conversational abilities. The new version is particularly better at following precise instructions and handling long multi-turn conversations. It performs strongly on the MT-Bench, WildBench, and Arena Hard benchmarks.

It’s worth noting that while generating longer responses might improve scores on some benchmarks, conciseness is crucial in many commercial applications. Shorter model generations enable faster interactions and are more cost-effective in inference. Therefore, the development team has invested significant effort to ensure the generated content remains concise whenever possible.

Language Diversity

Given the substantial number of commercial use cases involving multilingual documents today, Mistral Large 2’s training includes a large proportion of multilingual data. It performs well in English, French, German, Spanish, Italian, Portuguese, Dutch, Russian, Chinese, Japanese, Korean, Arabic, and Hindi. In the multilingual MMLU benchmark, Mistral Large 2 outperforms its predecessor, Mistral Large, as well as Llama 3.1 models and Cohere’s Command R+.

Tool Use and Function Calls

Mistral Large 2 has improved function-calling and retrieval skills. It was trained to execute both parallel and sequential function calls proficiently, which makes it a capable engine for complex commercial applications.
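The difference between parallel and sequential function calls can be sketched on the application side as follows. This is a minimal illustration, not Mistral's API: the two tools, the `{"name": ..., "arguments": "<JSON string>"}` call shape, and the helper names are all hypothetical. Parallel calls are independent requests issued in one model turn, while a sequential call needs the result of an earlier one.

```python
import json
from concurrent.futures import ThreadPoolExecutor


# Hypothetical tools standing in for real business functions.
def get_order_status(order_id: str) -> dict:
    return {"order_id": order_id, "status": "shipped"}


def get_shipping_eta(status: str) -> dict:
    return {"eta_days": 2 if status == "shipped" else None}


TOOLS = {"get_order_status": get_order_status, "get_shipping_eta": get_shipping_eta}


def run_tool_call(call: dict) -> dict:
    """Execute one tool call of the assumed form {"name": ..., "arguments": "<JSON>"}."""
    func = TOOLS[call["name"]]
    args = json.loads(call["arguments"])
    return {"name": call["name"], "result": func(**args)}


def run_parallel(calls: list[dict]) -> list[dict]:
    """Run independent calls from a single model turn concurrently, keeping order."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(run_tool_call, calls))


# Parallel: two independent lookups requested in the same turn.
parallel_results = run_parallel([
    {"name": "get_order_status", "arguments": json.dumps({"order_id": "A1"})},
    {"name": "get_order_status", "arguments": json.dumps({"order_id": "B2"})},
])

# Sequential: the second call's arguments depend on the first call's result.
# In a real loop the model would emit the second call after seeing the first result.
first = run_tool_call(
    {"name": "get_order_status", "arguments": json.dumps({"order_id": "A1"})}
)
second = run_tool_call({
    "name": "get_shipping_eta",
    "arguments": json.dumps({"status": first["result"]["status"]}),
})

print(parallel_results)
print(second)
```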

Try Mistral Large 2 on la Plateforme

Users can now access Mistral Large 2 through la Plateforme under the API name mistral-large-2407 and test it on le Chat. The release is version 24.07 (a YY.MM versioning system is used for all models). The weights for the instruction-tuned model are available and hosted on Hugging Face.
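As a rough sketch of what a call through the API might look like, the snippet below builds a chat-style request for mistral-large-2407 and derives the YY.MM version from the model name. The endpoint URL, the payload shape, and the `MISTRAL_API_KEY` environment variable are assumptions to check against the current API documentation; the helper names are hypothetical. The request is only constructed here, and `send` must be called explicitly with a valid key to contact the service.

```python
import json
import os
import urllib.request

# Assumed endpoint; verify against the current API documentation.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-large-2407"  # API name given in the article


def model_version(model_name: str) -> str:
    """Turn the trailing YYMM suffix into YY.MM form, e.g. '2407' -> '24.07'."""
    suffix = model_name.rsplit("-", 1)[-1]
    return f"{suffix[:2]}.{suffix[2:]}"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request with a single user message."""
    payload = {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (needs network and a valid key)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# MISTRAL_API_KEY is an assumed variable name; a placeholder is used if it is unset.
request = build_request(
    "Summarize the benefits of a 128k context window.",
    os.environ.get("MISTRAL_API_KEY", "placeholder-key"),
)
print(model_version(MODEL), request.get_method(), request.full_url)
```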

Accessing Mistral Models via Cloud Service Providers

Mistral AI has partnered with leading cloud service providers to bring the new Mistral Large 2 to global users. Notably, they have expanded their collaboration with Google Cloud Platform, providing Mistral AI’s models through Vertex AI’s managed API. Mistral AI’s top models are now available on Vertex AI, Azure AI Studio, Amazon Bedrock, and IBM watsonx.ai.

These partnerships make Mistral Large 2 more accessible, offering powerful AI tools to developers and enterprises worldwide to drive innovation and enhance efficiency.