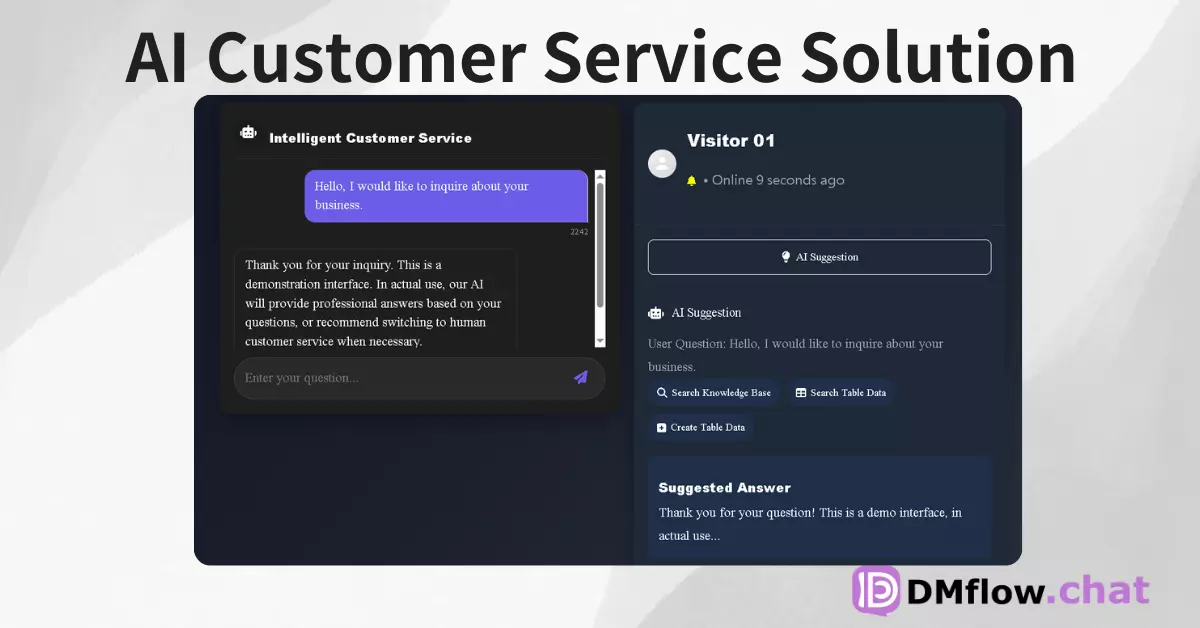

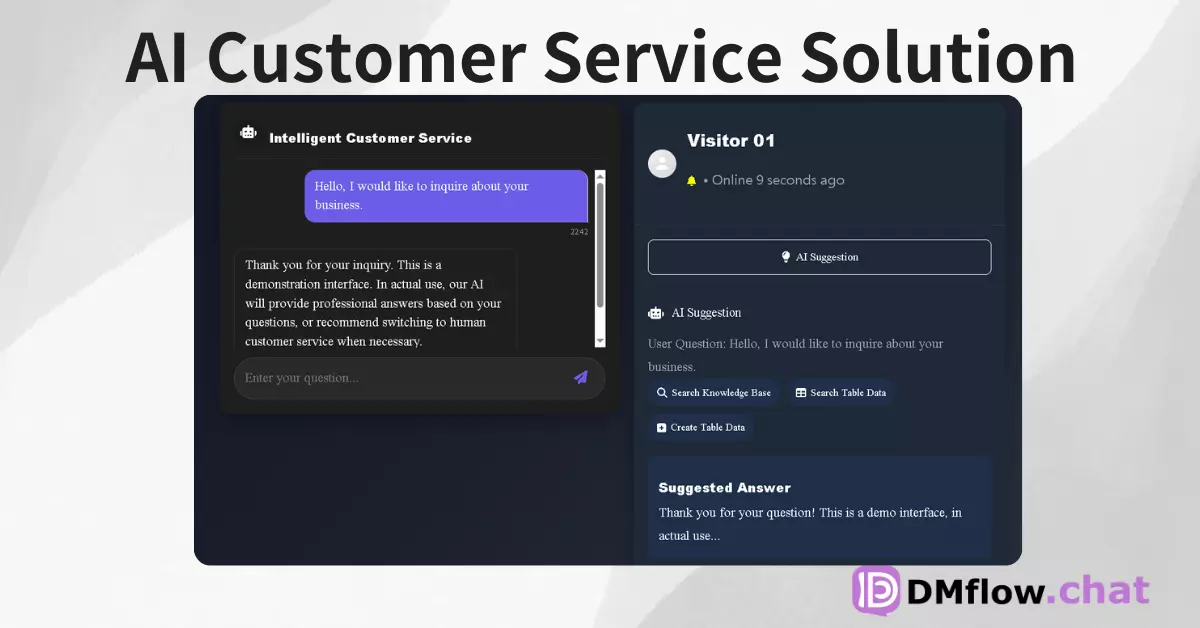


OpenAI has launched its latest o-series AI models: o3 and o4-mini. They’re not only smarter and more powerful, but also boast deeper reasoning capabilities and better tool integration. They can even “understand” and interact with images. Let’s see how this evolution in AI might transform our work and lives!

Speaking of AI, doesn’t it feel like there’s something new every day? Just yesterday (April 16, 2025), OpenAI surprised us again by officially unveiling the latest members of its o-series: OpenAI o3 and o4-mini.

And this time, it’s no small update—these two models have been trained to “think before they speak,” engaging in longer and deeper reasoning before giving answers. According to OpenAI, these are their most intelligent and capable models to date, marking a major leap in what ChatGPT can do for everyone.

Sounds impressive, right? So let’s dive in—what exactly has changed this time?

In short, the key upgrades in o3 and o4-mini are their enhanced reasoning capabilities and tool integration. They can browse the web, analyze uploaded files (yes, with Python!), deeply understand images, and even generate visuals.

Crucially, they’re trained to know when and how to use these tools to provide more detailed and thoughtful answers—often solving complex problems in under a minute. This makes them far more capable of handling multifaceted queries, and moves us closer to a truly autonomous “agent-style” ChatGPT.

This blend of elite reasoning and comprehensive tool access sets a new benchmark for both intelligence and usability, whether in academic benchmarks or real-world applications.

OpenAI o3 represents the peak of OpenAI’s current reasoning capabilities. It raises the bar in coding, math, science, and visual perception, and sets new state-of-the-art results on benchmarks including Codeforces (competitive programming), SWE-bench (software engineering), and MMMU (multimodal understanding).

It excels in tackling complex problems that require multifaceted analysis and don’t have obvious answers. It especially shines in visual tasks—analyzing images, charts, and diagrams. According to external assessments, o3 makes 20% fewer critical errors than its predecessor o1, particularly in areas like coding, business consulting, and creative ideation.

Early testers say o3 is like a thinking partner, especially in biology, math, and engineering. Its ability to generate and evaluate hypotheses is seriously impressive.

OpenAI o4-mini is a smaller, speed-optimized, cost-effective model. But don’t underestimate it: its performance is remarkable for its size and cost, particularly in math, code, and vision tasks. According to OpenAI, it is the best-performing benchmarked model on the 2024 and 2025 AIME (American Invitational Mathematics Examination).

Experts also found it beats its predecessor o3-mini in non-STEM tasks and data science. Thanks to its efficiency, o4-mini has fewer usage restrictions than o3, making it a great choice for high-frequency reasoning tasks. It’s incredibly cost-effective!

Beyond raw reasoning power, o3 and o4-mini also feel more natural in conversation. Thanks to improved intelligence and web integration, and because they can draw on memory and earlier conversations, their responses feel more personalized and relevant.

This is a major highlight of the update! For the first time, o3 and o4-mini can directly integrate images into their “chain of thought.” They don’t just see images—they think with them.

This unlocks a new way to solve problems that blends visual and textual reasoning. You can upload a whiteboard photo, textbook diagram, or even a sketch—blurry, upside-down, or low-quality images included—and the models can still interpret them.

Even cooler: using tools, the models can manipulate the images—rotate, zoom, transform—as part of the reasoning process. For more technical details, check out their visual reasoning blog post.
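To make the image workflow concrete, here is a minimal sketch of how a photo might be packaged for one of these models through the Chat Completions API. It assumes the API's documented content-part format for image input (a text part plus an `image_url` part carrying a base64 data URL). The helper name `image_message` and the placeholder bytes are illustrative only and do not come from OpenAI's post.

```python
import base64


def image_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build one user message that pairs a text question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            # The image travels inline as a base64 data URL.
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }


# Placeholder bytes stand in for a real photo read from disk.
message = image_message("What does this whiteboard diagram show?", b"placeholder image bytes")
print(message["content"][0]["text"])
```

The resulting dictionary would go into the `messages` list of a request. Any rotating or zooming happens on the model side as part of its reasoning, so the caller only needs to supply the image.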

As mentioned, the models learned how to use tools, but more importantly, they were trained—via reinforcement learning—to decide when and how to use them.

This decision-making ability makes them more capable in open-ended scenarios, especially those involving visual reasoning and multi-step workflows.

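For example, if you ask, “How will California’s summer energy usage compare to last year?” the model might search the web for public utility data, write Python code to build a forecast, generate a chart or image, and explain the key factors behind the prediction, chaining several tool calls together.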

And if it finds new info mid-process, it can adjust its strategy—performing multiple searches, refining queries, and making real-time decisions. This adaptability helps it handle tasks requiring up-to-date information beyond its training data.
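The search, refine, and decide behavior described here can be pictured as a small control loop. The sketch below is a toy illustration, not how o3 or o4-mini are implemented: the tools are hypothetical stubs that return canned text, and `scripted_policy` stands in for the model's learned decision about which tool to call next.

```python
from typing import Callable


# Hypothetical stand-ins for real tools; each returns canned text.
def web_search(query: str) -> str:
    return f"results for: {query}"


def run_python(code: str) -> str:
    return f"ran: {code}"


TOOLS: dict[str, Callable[[str], str]] = {"web_search": web_search, "run_python": run_python}


def run_agent(decide, question: str, max_steps: int = 8) -> tuple[str, list]:
    """Call `decide` repeatedly until it returns an answer or the step budget runs out."""
    history = []
    for _ in range(max_steps):
        action, argument = decide(question, history)
        if action == "answer":
            return argument, history
        result = TOOLS[action](argument)
        history.append((action, argument, result))
    return "step limit reached", history


def scripted_policy(question: str, history: list) -> tuple[str, str]:
    """Toy policy: search, refine the search, run code, then answer."""
    plan = [
        ("web_search", "California summer electricity demand"),
        ("web_search", "California utility load forecast this summer"),
        ("run_python", "build_forecast()"),
        ("answer", "forecast summary"),
    ]
    return plan[len(history)]


answer, steps = run_agent(scripted_policy, "How will California's summer energy usage compare to last year?")
print(answer, len(steps))
```

The key design point is that the policy sees the full history on every step, which is what lets it change course when an earlier search turns up something new.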

According to charts released by OpenAI (see original post), o3 and o4-mini outperform their predecessors (like o1 and o3-mini) not only in intelligence but also in cost-effectiveness.

For example, on the AIME 2025 math benchmark, o3’s cost-performance frontier improves on o1’s, and o4-mini’s improves on o3-mini’s. Bottom line: OpenAI expects that in most real-world use, o3 and o4-mini will be both smarter and cheaper than o1 and o3-mini, and the published charts support that claim on the benchmarks shown.

OpenAI emphasized that o3 and o4-mini have undergone their most rigorous safety evaluations yet. According to their updated Preparedness Framework, the risk levels in tracked areas—biology, chemistry, cybersecurity, and AI self-improvement—remain below the defined “high risk” threshold.

They’ve also added refusal prompts for high-risk domains like biohazards, malware generation, and jailbreaks. Additionally, they trained a reasoning LLM monitor, working from human-written and interpretable safety specifications, to flag dangerous prompts. Reportedly, in red team exercises focused on bio-risk, it flagged about 99% of concerning conversations. You can view full assessment results in the accompanying system card.

OpenAI also revealed a new experiment: Codex CLI. It’s a lightweight code agent you can run directly in your terminal. Designed to fully leverage models like o3 and o4-mini, it will also support GPT-4.1 and other API models in the future.

You can send it screenshots or low-fidelity sketches, and it can combine that with access to your local codebase—bringing multimodal reasoning right to your command line. OpenAI calls it a minimalist interface linking users, their computers, and AI. Good news: Codex CLI is now fully open source at github.com/openai/codex.

They’ve also launched a $1 million fund to support projects using Codex CLI and OpenAI models.

You won’t have to wait long!

OpenAI also plans to launch OpenAI o3-pro with full tool support in the coming weeks. For now, Pro users can still use o1-pro.

For developers, o3 and o4-mini are available today via the Chat Completions API and Responses API (some developers will need to verify their organization first). The Responses API supports reasoning summaries and can preserve reasoning tokens around function calls for better performance. Built-in tools such as web search, file search, and code interpreter are slated to follow soon.
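For readers who want to try this from code, here is a minimal sketch of a Responses API call that asks for a reasoning summary. It assumes the official `openai` Python package is installed and an `OPENAI_API_KEY` is set. The helper names `build_request` and `ask` are made up for this example, and the `reasoning` options shown (`effort`, `summary`) follow the API documentation as I understand it, so check the current reference before relying on them.

```python
def build_request(prompt: str, model: str = "o4-mini", effort: str = "medium") -> dict:
    """Assemble keyword arguments for a Responses API call with a reasoning summary."""
    return {
        "model": model,
        "input": prompt,
        # "summary": "auto" requests a summary of the model's reasoning, where available.
        "reasoning": {"effort": effort, "summary": "auto"},
    }


def ask(prompt: str) -> str:
    """Send the request; needs the `openai` package and OPENAI_API_KEY to be set."""
    from openai import OpenAI  # imported lazily so build_request works without the package

    client = OpenAI()
    response = client.responses.create(**build_request(prompt))
    return response.output_text


# Inspect the payload without making a network call.
print(build_request("Compare two sorting algorithms in three sentences."))
```

Calling `ask("...")` performs the real request. Passing `model="o3"` to `build_request` targets the larger model, subject to the tier and verification rules described in this section.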

| Model | API usage tier |
|---|---|
| o4-mini | 1–5 |
| o3 | 4–5 |

If you’re in tier 1–3 and want to access o3, head over to the API Organization Verification page.
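If you manage API access for a team, the tier rules above reduce to a simple lookup. The sketch below encodes only the model and tier pairs listed in this article. The function name `has_default_access` is hypothetical, and the check deliberately ignores organization verification, which can unlock o3 for lower tiers.

```python
# Minimum usage tier per model, as listed in the table (tiers run from 1 to 5).
MIN_TIER = {"o4-mini": 1, "o3": 4}


def has_default_access(model: str, tier: int) -> bool:
    """Return True if the tier can use the model without organization verification."""
    if model not in MIN_TIER:
        raise KeyError(f"unknown model: {model}")
    if not 1 <= tier <= 5:
        raise ValueError("tier must be between 1 and 5")
    return tier >= MIN_TIER[model]


for tier in range(1, 6):
    print(tier, has_default_access("o4-mini", tier), has_default_access("o3", tier))
```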

This update clearly signals OpenAI’s future direction: combining the o-series’ powerful reasoning with GPT’s more natural conversation and tool integration. By merging these strengths, future models will support seamless, intuitive dialogue—paired with proactive tool use and high-level problem-solving.

In short, o3 and o4-mini represent a major leap in AI capability. They reason more deeply, use tools proactively, and can now “see” and think with images. For all of us who use AI daily, this is seriously exciting news. Let’s see how these powerful models shape the next wave of innovation!

