DMflow.chat


Explore Magic’s groundbreaking research on 100M token context windows and its collaboration with Google Cloud. Discover how ultra-long context models are revolutionizing AI learning and their potential applications in software development.

Image credit: https://magic.dev/blog/series-a

Artificial Intelligence (AI) learning is undergoing significant changes. AI models currently learn in two main ways: through training and from context during inference. Training has dominated so far because context windows have been relatively short, but ultra-long context windows could shift that balance dramatically.

Magic’s Long-Term Memory (LTM) model can handle up to 100 million tokens of context during inference, equivalent to around 10 million lines of code or the content of 750 novels. This capability opens up revolutionary possibilities for AI in software development.
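As a quick sanity check, the equivalences quoted above imply rough per-line and per-novel token counts. The short Python sketch below only divides the figures given in the article; the resulting ratios are implied averages, not numbers published by Magic.

```python
# Back-of-the-envelope check of the context-size equivalences quoted above.
CONTEXT_TOKENS = 100_000_000   # 100 million tokens (from the article)
LINES_OF_CODE = 10_000_000     # about 10 million lines of code (from the article)
NOVELS = 750                   # about 750 novels (from the article)

# Implied averages, derived purely by division.
tokens_per_line = CONTEXT_TOKENS / LINES_OF_CODE
tokens_per_novel = CONTEXT_TOKENS / NOVELS

print(f"Implied tokens per line of code: {tokens_per_line:.0f}")   # 10
print(f"Implied tokens per novel: {tokens_per_novel:,.0f}")        # 133,333
```

In other words, the comparison assumes roughly 10 tokens per line of code and a little over 130,000 tokens per novel.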

Imagine an AI model that can hold all of your code, documentation, and libraries in its context, including those that are not on the public internet. Code synthesis could improve substantially, making development more efficient and reducing errors.

Traditional methods for evaluating long contexts have limitations. For example, the commonly used “needle in a haystack” evaluation places a random fact (the needle) in the middle of a long context window (the haystack) and asks the model to retrieve it. However, because the needle stands out from the surrounding text, this method may teach the model to recognize anomalies rather than to genuinely understand and process long contexts.
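To make the “needle in a haystack” setup concrete, here is a minimal Python sketch of how such a test prompt could be assembled. The function name `build_needle_prompt`, the filler sentences, and the needle text are all hypothetical and chosen for illustration. They are not taken from any specific benchmark.

```python
import random

def build_needle_prompt(filler_sentences, needle, num_sentences, seed=0):
    """Build a haystack of filler text with one needle sentence inserted.

    Returns the prompt text and the sentence index where the needle was placed.
    """
    rng = random.Random(seed)
    # The haystack: many ordinary sentences drawn from a small filler pool.
    haystack = [rng.choice(filler_sentences) for _ in range(num_sentences)]
    # Insert the single out-of-place fact at a random position.
    position = rng.randrange(num_sentences + 1)
    haystack.insert(position, needle)
    return " ".join(haystack), position

# Hypothetical filler and needle, for illustration only.
filler = [
    "The whale surfaced near the ship at dawn.",
    "The crew mended the sails through the afternoon.",
    "A storm gathered slowly on the horizon.",
]
needle = "The secret passphrase is blue-giraffe-42."
question = "What is the secret passphrase?"

prompt, position = build_needle_prompt(filler, needle, num_sentences=1000)
print(f"Needle inserted at sentence {position} of {prompt.count('.')}")
```

The needle is semantically unrelated to the filler, which is exactly the property that lets a model succeed by spotting the odd sentence out rather than by tracking the whole context.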

To address this, Magic designed a new evaluation method called HashHop. It uses pairs of random hashes to test the model’s storage and retrieval capabilities. Because random hashes cannot be compressed, the model must store and retrieve the maximum possible amount of information for a given context size.

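In outline, the model is given a context filled with hash pairs and is then asked to complete a randomly selected pair. In the multi-hop variant, it must follow a chain of hashes across several pairs.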

This method not only evaluates single-step reasoning but also tests multi-step reasoning and cross-context reasoning, making it more aligned with real-world applications.
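The idea of a hash-pair evaluation with multi-step lookups can be sketched in a few lines of Python. This is an illustrative approximation under stated assumptions, not Magic's implementation: the `a = b` line format, the 64-bit hash length, and the function names `build_hashhop_example` and `follow_chain` are all hypothetical.

```python
import random

def build_hashhop_example(num_chains=4, hops=3, seed=0):
    """Create shuffled hash pairs forming several chains, plus one query.

    Returns the context text, the query string, and the chain being queried.
    """
    rng = random.Random(seed)
    # Each chain is hops + 1 random 64-bit hex strings: h0 -> h1 -> ... -> h_hops.
    chains = [
        [f"{rng.getrandbits(64):016x}" for _ in range(hops + 1)]
        for _ in range(num_chains)
    ]
    # Break every chain into adjacent pairs and shuffle them, so that
    # position in the context carries no hint about which pairs belong together.
    pairs = [(c[i], c[i + 1]) for c in chains for i in range(hops)]
    rng.shuffle(pairs)
    context = "\n".join(f"{a} = {b}" for a, b in pairs)
    # Ask about one randomly chosen chain, starting from its first hash.
    target = rng.choice(chains)
    query = f"{target[0]} = ?"
    return context, query, target

def follow_chain(context, start, hops):
    """Reference solver: resolve a chain by repeated lookups in the context."""
    lookup = dict(line.split(" = ") for line in context.splitlines())
    path = [start]
    for _ in range(hops):
        path.append(lookup[path[-1]])
    return path

context, query, target = build_hashhop_example()
print(query)
print(" -> ".join(follow_chain(context, target[0], hops=3)))
```

A model under test would see `context` and `query` and would need to produce the chain that `follow_chain` computes here by dictionary lookup. Since the hashes are random, there is no semantic shortcut for finding the right pairs.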

Magic recently trained its first 100-million-token context model, LTM-2-mini. Compared with traditional models, it handles long contexts far more efficiently and with much lower memory requirements.

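Key advantages of LTM-2-mini include a sequence-dimension algorithm that is much more efficient than that of traditional models, significantly reduced memory requirements, and strong performance on HashHop evaluations.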

LTM-2-mini also shows potential in code synthesis, producing reasonable outputs for tasks like creating a calculator using a custom GUI framework and implementing a password strength meter, despite its smaller scale compared to current top models.
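To see why memory is the bottleneck for traditional attention-based models at this context length, the following Python sketch estimates the size of a key-value (KV) cache for 100 million tokens. The layer count, number of KV heads, head dimension, 16-bit precision, and 80 GB of GPU memory are assumptions chosen to resemble a very large transformer with grouped-query attention. They are not figures from the article, and the function name `kv_cache_bytes` is hypothetical.

```python
import math

def kv_cache_bytes(num_tokens, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for num_tokens tokens."""
    # Factor 2: one key vector and one value vector per layer and KV head.
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return num_tokens * per_token

# Assumed, illustrative configuration (not taken from the article).
total = kv_cache_bytes(
    num_tokens=100_000_000,  # 100 million tokens of context
    num_layers=126,
    num_kv_heads=8,
    head_dim=128,
)
gpus = math.ceil(total / 80e9)  # assuming 80 GB of memory per GPU

print(f"KV cache: {total / 1e12:.1f} TB, about {gpus} GPUs just to hold it")
# KV cache: 51.6 TB, about 646 GPUs just to hold it
```

Under these assumptions, a single user's context would occupy hundreds of accelerators before any computation happens, which is why an architecture with much lower memory requirements matters at this scale.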

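To further advance its research and development, Magic has formed a strategic partnership with Google Cloud. The collaboration focuses on giving Magic access to Google Cloud’s computing resources and AI tools in order to accelerate the training and deployment of its models.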

This partnership is expected to improve Magic’s inference and training efficiency, providing rapid scaling capabilities and access to a rich ecosystem of cloud services.

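As Magic trains larger LTM-2 models on its new supercomputers, we can anticipate further breakthroughs, such as models that understand and manage larger codebases, offer more accurate suggestions, and automate more complex programming tasks.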

These advancements will not only push the boundaries of AI technology but could also bring transformative changes across various industries.

Q: What is an ultra-long context window, and why is it important?

A: An ultra-long context window allows an AI model to handle vast amounts of information during inference. Magic’s LTM models, for example, can process up to 100 million tokens of context. This is crucial for improving AI performance in complex tasks, especially in fields like software development that require extensive contextual information.

Q: What are the features of Magic’s LTM-2-mini model?

A: LTM-2-mini is a model capable of handling 100 million tokens of context. Its sequence-dimension algorithm is much more efficient than that of traditional models, and its memory requirements are significantly lower. It performs well in HashHop evaluations and shows promise in code synthesis.

Q: What impact will Magic’s collaboration with Google Cloud have?

A: This collaboration will enable Magic to leverage Google Cloud’s powerful computing resources and AI tools, accelerating the training and deployment of its models. This could lead to the rapid development of more robust and efficient AI models, driving progress across the AI industry.

Q: What potential impact do ultra-long context models have on software development?

A: These models could revolutionize code synthesis and software development processes by understanding and managing larger codebases, offering more accurate suggestions, and automating more complex programming tasks, thereby greatly improving development efficiency and code quality.

Q: What are the advantages of the HashHop evaluation method?

A: HashHop evaluates a model’s storage and retrieval abilities using random, incompressible hashes, avoiding the implicit semantic cues in traditional methods. This approach better reflects a model’s performance in real-world applications, especially in tasks requiring multi-step reasoning.

DMflow.chat: Intelligent integration that drives innovation. With persistent memory, customizable fields, seamless database and form connectivity, plus API data export, experience unparalleled flexibility and efficiency.

7-Day Limited Offer! Windsurf AI Launches Free Unlimited GPT-4.1 Trial — Experience Top-Tier AI N...

Eavesdropping on Dolphins? Google’s AI Tool DolphinGemma Unlocks Secrets of Marine Communication ...

WordPress Goes All-In! Build Your Website with a Single Sentence? Say Goodbye to Website Woes wit...

The Great AI Agent Alliance Begins! Google Launches Open-Source A2A Protocol, Ushering in a New E...

Llama 4 Leaked Training? Meta Exec Denies Cheating Allegations, Exposes the Grey Zone of AI Model...

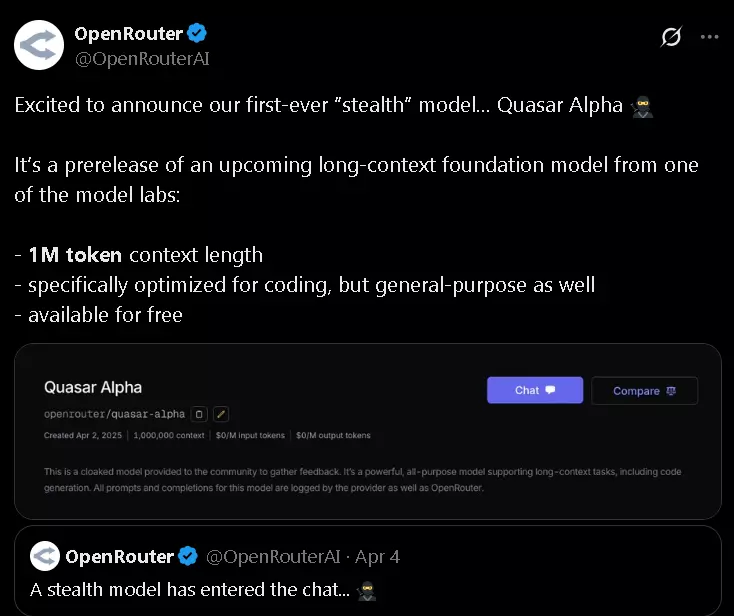

Secret Weapon Unleashed? OpenRouter Silently Drops Million-Token Context Model Quasar Alpha! ...

OpenAI to Release an Open-Source Reasoning Model: A Game-Changer in AI OpenAI is set to relea...

How to Use Felo to Replace Traditional Web Searching Tired of getting lost in the vast sea of...

Trae: The Next-Generation AI Code Editor, Unleashing Your Development Potential In today’s ra...

By continuing to use this website, you agree to the use of cookies according to our privacy policy.