MuseTalk Deep Dive: The Real-Time, High-Fidelity AI Lip Sync Powerhouse from Tencent Music

Explore MuseTalk, a technology developed by Tencent Music’s Lyra Lab. Discover how this open-source AI model enables real-time, high-quality video lip-syncing, supports multiple languages, and what technical innovations the latest version 1.5 brings along with its application potential.

Have you ever imagined a tool that makes a person in a video speak naturally in sync with any audio, in real time? In the past, achieving this was a time-consuming and complex process. But now, AI technology is changing the game. Today, we’re diving into an incredible tool launched by Tencent Music Entertainment Group’s (TME) Lyra Lab — MuseTalk.

In short, MuseTalk is an AI model designed for real-time, high-quality lip-syncing. Feed it a piece of audio and a character’s face, especially the lips, moves in sync with the sound, and the results look impressively natural. Better still, the team reports that MuseTalk runs at over 30 frames per second on an NVIDIA Tesla V100. What does that mean? Real-time processing is actually possible!

And MuseTalk isn’t just a lab prototype. It’s already open-sourced on GitHub, and the model is also available on Hugging Face. For developers and creators, this is fantastic news.

What Exactly Is MuseTalk?

At its core, MuseTalk modifies a face it has never seen during training so that it matches the input audio. It works on a 256 x 256 facial region, focusing on the mouth, chin, and other key areas to keep the lips in sync with the voice.

Some standout features include:

- Multilingual capability: Whether you input Mandarin, English, or Japanese, MuseTalk can handle it — thanks to a powerful audio processing backbone. Theoretically, it could support even more languages if the audio model supports them.

- Real-time inference: That 30fps+ speed is a big deal. It makes MuseTalk perfect for use cases like virtual avatars, real-time dubbing, or live translation. Of course, the actual performance depends on your hardware, especially your GPU. For local runs, you’ll want a decent graphics card.

- Adjustable face center point: This is a cool feature — you can manually specify the facial focus region. Why is this important? Because different faces or angles may require different focus areas for optimal sync. By adjusting this, you can significantly improve the quality of the results.

- Training data foundation: The model is trained on HDTF (a high-quality facial video dataset) along with some private datasets, providing a strong base for realistic generation.
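To make the face center idea concrete, here’s a minimal sketch. It assumes the face region is an axis-aligned box in pixel coordinates and that “adjusting the center” means sliding that box up or down before it is cropped and resized to 256 x 256. The function name `shift_face_box` and its parameters are hypothetical and are not MuseTalk’s actual API; check the repository for the real option.

```python
def shift_face_box(box, shift_y, frame_height):
    """Slide a face box (x1, y1, x2, y2) vertically by shift_y pixels.

    Positive shift_y moves the box down (toward the chin); negative moves it up.
    The box is clamped so it stays inside the frame without changing its size.
    """
    x1, y1, x2, y2 = box
    height = y2 - y1
    new_y1 = y1 + shift_y
    # Clamp so the whole box remains within [0, frame_height].
    new_y1 = max(0, min(new_y1, frame_height - height))
    return (x1, new_y1, x2, new_y1 + height)


box = (100, 80, 300, 280)  # hypothetical face-detector output
print(shift_face_box(box, 10, 720))    # (100, 90, 300, 290)
print(shift_face_box(box, -200, 720))  # (100, 0, 300, 200)
```

A small downward shift puts more of the chin inside the crop, while an upward shift includes more of the upper face. Which direction helps depends on the face and camera angle.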

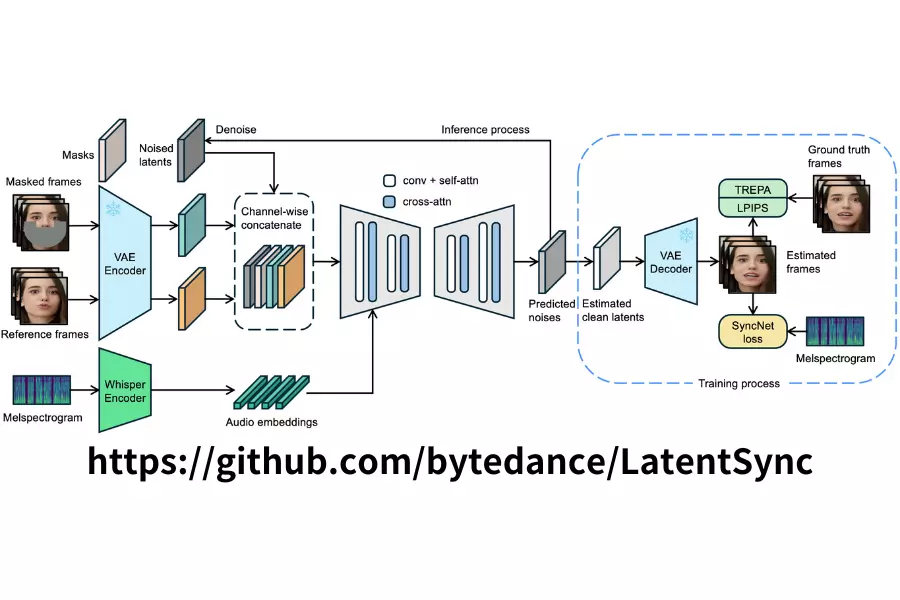

Behind the Scenes: The Technical Magic of MuseTalk

MuseTalk works in a clever way. Rather than modifying the raw image directly, it operates in something called latent space. Think of it as compressing the image into a “core representation,” performing the modifications there, and then reconstructing the image.
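How much smaller is that “core representation”? Here’s a rough size calculation. It assumes a Stable Diffusion-style VAE that shrinks each side by a factor of 8 and produces 4 latent channels. Those two numbers are assumptions for illustration, so verify them against the VAE you actually load.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Return (channels, height, width) of the latent for an RGB image."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be divisible by the downsample factor")
    return (latent_channels, height // downsample, width // downsample)


def num_values(shape):
    """Count the scalar values in a tensor of the given shape."""
    total = 1
    for dim in shape:
        total *= dim
    return total


image_shape = (3, 256, 256)      # RGB face crop
latent = latent_shape(256, 256)  # assumed 8x downsampling, 4 channels
ratio = num_values(image_shape) / num_values(latent)
print(latent, ratio)             # (4, 32, 32) 48.0
```

Under these assumptions the generator works on roughly 48 times fewer values than the raw crop, which is part of why editing in latent space is cheap.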

Core technical components include:

- Image Encoder (VAE): Uses a fixed, pre-trained VAE model (ft-mse-vae) to encode the image into latent space.

- Audio Encoder: Based on OpenAI’s Whisper-tiny model (also fixed and pre-trained) to extract features from the audio. Whisper is renowned for its multilingual capabilities.

- Generation Network: This part is inspired by the UNet architecture used in Stable Diffusion. Audio features are injected via cross-attention, guiding the model to generate correct mouth shapes in the latent image.
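The cross-attention step is easier to picture with a toy version. The sketch below is plain Python with a single head and no learned projection matrices, so it is a simplification and not MuseTalk’s actual layer. Queries come from image-latent tokens, and keys and values come from audio-feature tokens. All numbers are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Single-head cross-attention without learned projections.

    queries: image-latent tokens, one vector per spatial position.
    keys, values: audio-feature tokens, one vector per audio time step.
    Returns one output vector per query: a weighted mix of the values.
    """
    scale = 1.0 / math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Similarity between this image token and every audio token.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        # Blend the audio values using the attention weights.
        mixed = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


# Toy example: 2 image tokens attend over 3 audio tokens.
image_tokens = [[1.0, 0.0], [0.0, 1.0]]
audio_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = cross_attention(image_tokens, audio_tokens, audio_tokens)
print(len(out), len(out[0]))  # 2 2
```

Each image position ends up with its own blend of audio information, which is how the sound can steer the mouth region differently from, say, the chin.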

One important distinction: While MuseTalk uses a UNet structure similar to Stable Diffusion, it is not a diffusion model. Diffusion models usually require multiple denoising steps. MuseTalk, by contrast, performs single-step latent-space inpainting, which is one reason it can run in real time.
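A quick back-of-the-envelope calculation shows why the single step matters. The sketch assumes the generator’s forward passes dominate the per-frame cost and ignores encoding, decoding, and blending. The 20 ms per pass and the 25-step count are hypothetical numbers chosen for illustration, not measurements.

```python
def frames_per_second(ms_per_pass, passes_per_frame):
    """Upper bound on FPS if the generator's forward passes dominate cost."""
    return 1000.0 / (ms_per_pass * passes_per_frame)


MS_PER_PASS = 20.0  # hypothetical cost of one UNet forward pass

print(frames_per_second(MS_PER_PASS, 1))   # single step: 50.0
print(frames_per_second(MS_PER_PASS, 25))  # 25 denoising steps: 2.0
```

With the same network, every extra denoising step divides the achievable frame rate, so a single pass per frame is what keeps a 30fps target within reach.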

Sounds complex? Here’s a simpler analogy: imagine combining the “instruction” from audio with the “canvas” of an image (in compressed form), then using a powerful “brush” (the generation network) to paint the correct lip motion.

Going Further: The Glorious Upgrade in MuseTalk 1.5

Technology always moves forward, and MuseTalk is no exception. In early 2025, the team released MuseTalk 1.5, featuring major improvements:

- Enhanced loss functions: In addition to standard losses (like L1 loss), version 1.5 introduces Perceptual Loss, GAN Loss, and Sync Loss.

  - Perceptual and GAN Loss help improve visual realism and facial detail.

  - Sync Loss focuses on making sure lip movements precisely match the audio. You can read more in their technical report.

- Two-stage training strategy: This helps balance visual quality with lip-sync accuracy through a more sophisticated training process.

- Spatio-Temporal Sampling: A smarter data sampling method during training, allowing the model to learn more coherent and accurate mouth dynamics.
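Losses like these are typically combined as a weighted sum. Here’s a minimal sketch of that idea. The weights and the individual loss values are hypothetical placeholders, and the real formulation and weighting are described in the technical report.

```python
def l1_loss(pred, target):
    """Mean absolute error between two equal-length sequences."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def total_loss(l1, perceptual, gan, sync, weights):
    """Weighted sum of the four loss terms named above."""
    return (
        weights["l1"] * l1
        + weights["perceptual"] * perceptual
        + weights["gan"] * gan
        + weights["sync"] * sync
    )


# Hypothetical weights and per-term values, for illustration only.
weights = {"l1": 1.0, "perceptual": 0.5, "gan": 0.5, "sync": 2.0}
l1 = l1_loss([0.0, 0.5], [0.5, 0.5])  # 0.25
print(total_loss(l1, perceptual=0.5, gan=0.25, sync=0.125, weights=weights))  # 0.875
```

Raising the sync weight pushes training toward tighter lip timing, while the perceptual and GAN weights pull toward sharper detail. Balancing that trade-off is the job the two-stage strategy is described as doing.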

These upgrades boost MuseTalk 1.5’s performance in clarity, identity preservation, and lip-sync accuracy — significantly outperforming earlier versions. Even better, the inference code, training scripts, and model weights for version 1.5 are now fully open source! This opens the door for community development and research.

Applications and Potential

What can MuseTalk actually do? Turns out, quite a lot:

- Virtual avatar systems: Combined with tools like MuseV (another video generation tool from the same lab), you can build realistic talking virtual humans.

- Film and TV dubbing: Significantly speeds up dubbing workflows for movies, shows, or animations, and potentially cuts costs. Multilingual versions become much easier to produce.

- Content creation: YouTubers, streamers, or social media creators can use it to make more engaging content — like making a photo or painting “talk.”

- Education & accessibility: Quickly add synced voiceovers to instructional videos or provide visual speech cues for the hearing impaired.

- Real-time translated visual dubbing: In live conferences or streams, sync translated speech with a speaker’s mouth movements — a promising application for cross-language communication (though this still requires additional integration work).

How to Get Started with MuseTalk?

If you’re interested in trying MuseTalk yourself, here are some great starting points:

- GitHub repository: https://github.com/TMElyralab/MuseTalk

  - It contains full source code, installation instructions, and usage examples.

  - Be sure to check the README for hardware/software requirements.

- Hugging Face model page: https://huggingface.co/TMElyralab/MuseTalk

  - Here you can directly download pre-trained model weights.

- Technical report: https://arxiv.org/abs/2410.10122

  - For those interested in technical details, the research paper is available here.

Since MuseTalk’s training code is open source, experienced developers can even fine-tune or retrain it using custom datasets for specialized use cases.
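If you’d rather script the weight download from the Hugging Face page listed above, here’s one way to do it with the `huggingface_hub` package. The `models` target directory is an assumption, so check the README for the folder layout the inference scripts actually expect.

```python
REPO_ID = "TMElyralab/MuseTalk"  # taken from the Hugging Face URL above


def download_weights(local_dir="models"):
    """Download a snapshot of the model repository into local_dir.

    Requires `pip install huggingface_hub`. The import is done lazily so this
    file can be loaded even when the package is not installed.
    """
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=REPO_ID, local_dir=local_dir)


# Usage (a large download, so it is not run automatically):
# path = download_weights("models")
```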

Frequently Asked Questions (FAQ)

Here are answers to some common questions:

- Q: Is MuseTalk free to use?

- A: Yes. MuseTalk’s code and pre-trained models are open source. The repository lists the MIT License for the code (check the license file on GitHub for the exact terms, including those of the third-party models it builds on, such as Whisper and the VAE). You’re free to use, modify, and distribute it under those terms.

- Q: How does MuseTalk compare to other lip-sync models like Wav2Lip?

- A: MuseTalk’s main strengths are its real-time performance and the higher-quality output of v1.5 (thanks to the added perceptual, GAN, and sync losses). It works in latent space and generates each frame in a single step, since it isn’t a diffusion model. Wav2Lip is also excellent, but MuseTalk might have an edge in responsiveness and output quality, depending on your use case.

- Q: What kind of computer do I need to run MuseTalk?

- A: Official benchmarks mention 30fps+ on an NVIDIA Tesla V100 — a professional-grade GPU. For personal use, you’ll likely need a solid NVIDIA GPU (e.g., RTX 20, 30, or newer series) with sufficient VRAM. Check GitHub for specific hardware recommendations.

- Q: Is adjusting the “face center point” really that important?

- A: Yes — the team emphasizes that adjusting this can have a significant impact. The model needs to know where to focus on the face. If automatic detection struggles (e.g., due to unique angles or occlusions), manual adjustment helps the model target the mouth area more accurately and generate more natural results.

Final Thoughts

MuseTalk is undoubtedly a major step forward in AI-powered content creation. It not only showcases Tencent Music Lyra Lab’s expertise in audio-visual AI but also brings powerful tools to developers and creators through open source.

From real-time virtual interactions to efficient dubbing workflows, MuseTalk opens the door to countless possibilities. With continued technical advancement and community collaboration, we can expect to see even more innovative applications. If you’re into AI video generation, virtual avatars, or just want to make your photos “sing,” MuseTalk is definitely worth exploring.