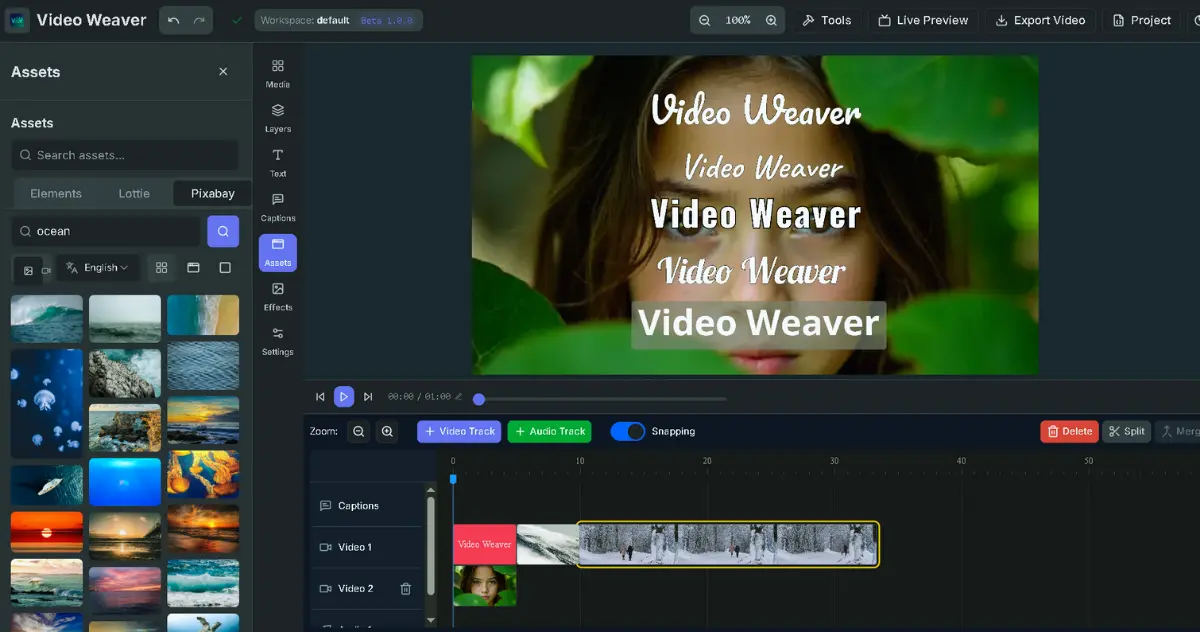

Google’s newly released Gemma 2 2B model ranks above much larger models such as GPT-3.5 and Mixtral 8x7B on the LMSYS Chatbot Arena leaderboard, despite having only 2.6 billion parameters. This article covers the features, advantages, and significance of Gemma 2 2B in the AI field.

Image credit: Smaller, Safer, More Transparent: Advancing Responsible AI with Gemma

Gemma 2 2B: Small but Powerful AI Innovator

Gemma 2 2B is Google’s latest Gemma model. It is compact, yet with only 2.6 billion parameters it outperforms many models several times its size.

Impressive Performance

- LMSYS Rankings: Gemma 2 2B scores higher than well-known models like GPT-3.5 and Mixtral 8x7B on the LMSYS Chatbot Arena leaderboard.

- Benchmark Scores: Scores 56.1 on MMLU (Massive Multitask Language Understanding) and 36.6 on MBPP (Mostly Basic Python Problems).

- Significant Improvement: Compared to its predecessor, Gemma 1 2B, it improves by more than 10% across various benchmarks.

Technical Features

- Parameter Size: Only 2.6 billion parameters, much smaller than large models like GPT-3.5.

- Multilingual Support: Capable of processing multiple languages.

- Large Training Set: Trained on 2 trillion tokens.

- Knowledge Distillation: Likely uses knowledge distillation from the Gemma 2 27B model.

- Efficient Training: Trained on 512 TPU v5e units.
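
To make the knowledge distillation item concrete, here is a minimal sketch of a distillation loss for a single token position: the student is trained to match the teacher's full probability distribution rather than a single correct token. This is a generic textbook formulation, not Google's training code. The function names and the example logits are made up for illustration.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits):
    """Cross-entropy of the student's distribution against the teacher's
    soft targets for one token position. Lower means a closer match."""
    p_teacher = softmax(teacher_logits)
    p_student = softmax(student_logits)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))


# Hypothetical logits over a three-token vocabulary.
teacher = [2.0, 1.0, 0.1]
close_student = [1.9, 1.1, 0.2]
far_student = [0.1, 1.0, 2.0]

print("close student loss:", distillation_loss(teacher, close_student))
print("far student loss:  ", distillation_loss(teacher, far_student))
```

The loss is smallest when the student reproduces the teacher's distribution exactly, which is why a small model can pick up more signal per token than it would from one-hot labels alone.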

Lightweight Deployment Advantages

One of Gemma 2 2B’s highlights is how little it takes to deploy:

- INT8 Quantization: Requires only about 2.5GB of storage space.

- INT4 Quantization: Can be compressed to about 1.25GB.

This means that even a standard personal computer or an edge device can run a model that ranks above GPT-3.5 on the Chatbot Arena leaderboard.
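
The storage figures above follow from simple arithmetic: parameter count times bits per weight. The sketch below is a rough estimate only. It assumes 1 GB = 10^9 bytes and counts the weights alone, ignoring tokenizer files, quantization metadata, and runtime memory such as the KV cache. The function name is illustrative.

```python
PARAMS = 2.6e9  # parameter count quoted in this article


def weight_size_gb(num_params, bits_per_weight):
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for label, bits in [("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{label}: {weight_size_gb(PARAMS, bits):.2f} GB")
```

With 2.6 billion parameters this gives roughly 2.6 GB at INT8 and 1.3 GB at INT4, in line with the rounded figures of about 2.5GB and 1.25GB quoted above.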

Broad Compatibility

Gemma 2 2B supports various mainstream AI frameworks and tools:

- Hugging Face Transformers

- llama.cpp

- MLX

- Candle

This broad compatibility lowers the barrier to entry for developers and makes the model quick to adopt.
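
As an illustration of the first option in the list, here is a minimal sketch using the Hugging Face Transformers `pipeline` API. It makes several assumptions: that the instruction-tuned checkpoint is published as `google/gemma-2-2b-it`, that you have accepted the model's terms on Hugging Face and are logged in, and that your installed `transformers` version is recent enough to support Gemma 2 and chat-style pipeline input. The helper names are made up for this example.

```python
def build_chat_pipeline(model_id="google/gemma-2-2b-it"):
    """Load a text-generation pipeline. The model ID is an assumption;
    check the model page for the exact name."""
    # Imported lazily so generate_reply can be used with any compatible callable.
    from transformers import pipeline

    return pipeline("text-generation", model=model_id)


def generate_reply(chat_pipeline, prompt, max_new_tokens=128):
    """Send a single user message and return the model's reply text."""
    messages = [{"role": "user", "content": prompt}]
    outputs = chat_pipeline(messages, max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the whole conversation;
    # the last entry is the newly generated assistant message.
    return outputs[0]["generated_text"][-1]["content"]


# Example usage (downloads the model weights on first run):
# chat = build_chat_pipeline()
# print(generate_reply(chat, "Explain knowledge distillation in one sentence."))
```

Only a user message is sent here, with no system message, to keep the sketch as simple as possible.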

Significance of Gemma 2 2B

- Democratizing AI: High-performance AI models are no longer restricted to large tech companies. Small and medium enterprises and individual developers can also easily access and use them.

- Advancing Edge AI: Opens new possibilities for edge computing and on-device AI applications.

- Setting New AI Performance Standards: Demonstrates that small models can achieve or even surpass the performance of large models through sophisticated design and training.

- Optimizing Resource Utilization: Reduces computational resources and energy consumption while maintaining performance.

Future Prospects

The success of Gemma 2 2B is likely to push the AI field towards lighter, more efficient models. We can anticipate:

- More small, efficient models optimized for specific tasks.

- Further expansion of AI application scenarios, especially on mobile and IoT devices.

- Wider adoption of AI technology as usage barriers fall, promoting broader innovation.

Quick Start with Gemma 2 2B

Online Trial:

- Visit the Gemma 2 2B model page on Hugging Face

- Use Google Colab for quick testing without local installation

Running on CPU:

- To run on CPU, use a quantized GGUF version of the model

- Visit the gemma-2-2b-it-abliterated-GGUF model page

- Download the q4_K_M model file, which that page recommends for low-spec devices

By following these steps, you can easily deploy and use the Gemma 2 2B model in your local environment to explore its powerful AI capabilities.
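
Once a GGUF file is downloaded, one way to run it on CPU from Python is the `llama-cpp-python` package, which provides Python bindings for llama.cpp. The sketch below assumes that package is installed. The file path in the usage comment is a placeholder, not a real file name, and the helper names are made up for this example.

```python
def load_model(model_path, context_tokens=2048):
    """Load a local GGUF file. Requires the llama-cpp-python package."""
    # Imported lazily so ask() can be used with any compatible object.
    from llama_cpp import Llama

    return Llama(model_path=model_path, n_ctx=context_tokens, verbose=False)


def ask(llm, prompt, max_tokens=128):
    """Send one user message and return the reply text."""
    response = llm.create_chat_completion(
        messages=[{"role": "user", "content": prompt}],
        max_tokens=max_tokens,
    )
    # The response follows the OpenAI-style chat completion layout.
    return response["choices"][0]["message"]["content"]


# Example usage (replace the placeholder with the file you downloaded):
# llm = load_model("path/to/your-q4_K_M-file.gguf")
# print(ask(llm, "Summarize what a GGUF file is in one sentence."))
```

A smaller context window lowers memory use, which can help on low-spec devices.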

Conclusion

The emergence of Gemma 2 2B marks a new stage in AI technology. It not only showcases the enormous potential of small models but also paves the way for the popularization and democratization of AI. With the continued development of such efficient models, we have reason to believe that AI technology will play a role in a broader range of fields, bringing more innovation and convenience to human society.

FAQ

Q: How can Gemma 2 2B achieve performance surpassing large models with such a small parameter size? A: This is likely due to advanced knowledge distillation techniques, meticulously designed model architecture, and high-quality training data.

Q: What application scenarios are suitable for Gemma 2 2B? A: Due to its compact and efficient characteristics, Gemma 2 2B is particularly suitable for edge computing, mobile device AI, and AI applications for small businesses.

Q: Does using Gemma 2 2B require special hardware? A: No. One of the significant advantages of Gemma 2 2B is that it can run on standard personal computers and even some high-end mobile devices.

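Q: Is Gemma 2 2B open source? A: The model weights are openly available, but they are released under the Gemma Terms of Use rather than a standard open-source license.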

Q: What impact does Gemma 2 2B have on the AI industry? A: The success of Gemma 2 2B may drive the entire industry to focus more on developing small, efficient models and promote the expansion of AI technology into broader application scenarios.